In 2025, AI agents are no longer confined to smart speakers and browsers. They have moved into mobile apps, where they act on behalf of users, anticipate needs, and execute tasks without repeated input. Apps that adopt these autonomous agents are redefining convenience, and developers in both India and the US are building this future now.

🔍 What Is an AI Agent in Mobile Context?

Unlike traditional assistants that rely on one-shot commands, AI agents in mobile apps have:

- Autonomy: They can decide next steps without user nudges.

- Memory: They retain user context between sessions.

- Multi-modal interfaces: Voice, text, gesture, and predictive actions.

- Intent handling: They parse user goals and translate them into actions.

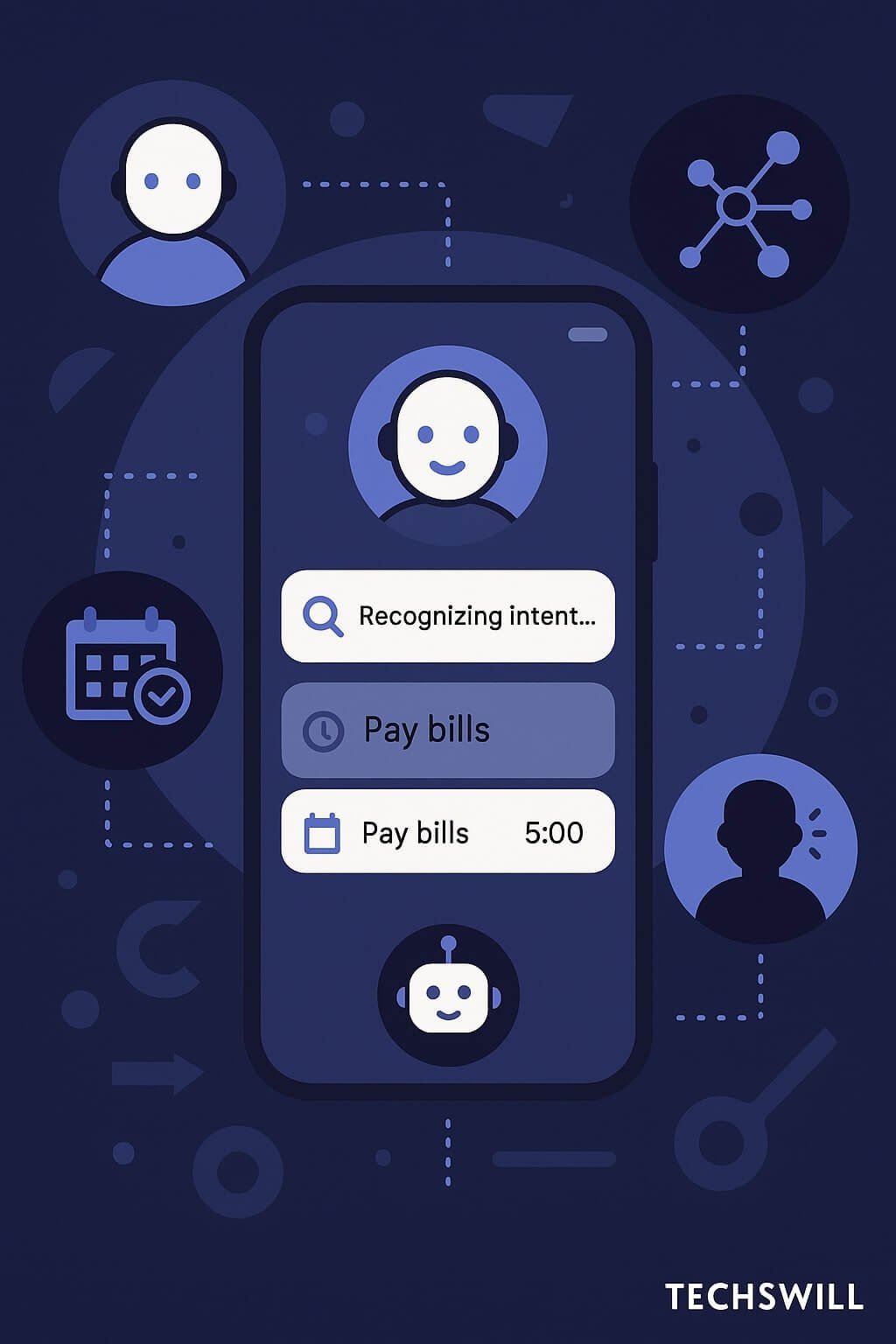

📱 Example: Task Agent in a Productivity App

Instead of a to-do list that only stores items, the AI agent in 2025 can:

- Parse task context from emails, calendar entries, and voice notes.

- Set reminders and auto-schedule tasks into available time blocks.

- Update status based on passive context (e.g., you attended a meeting → mark task done).

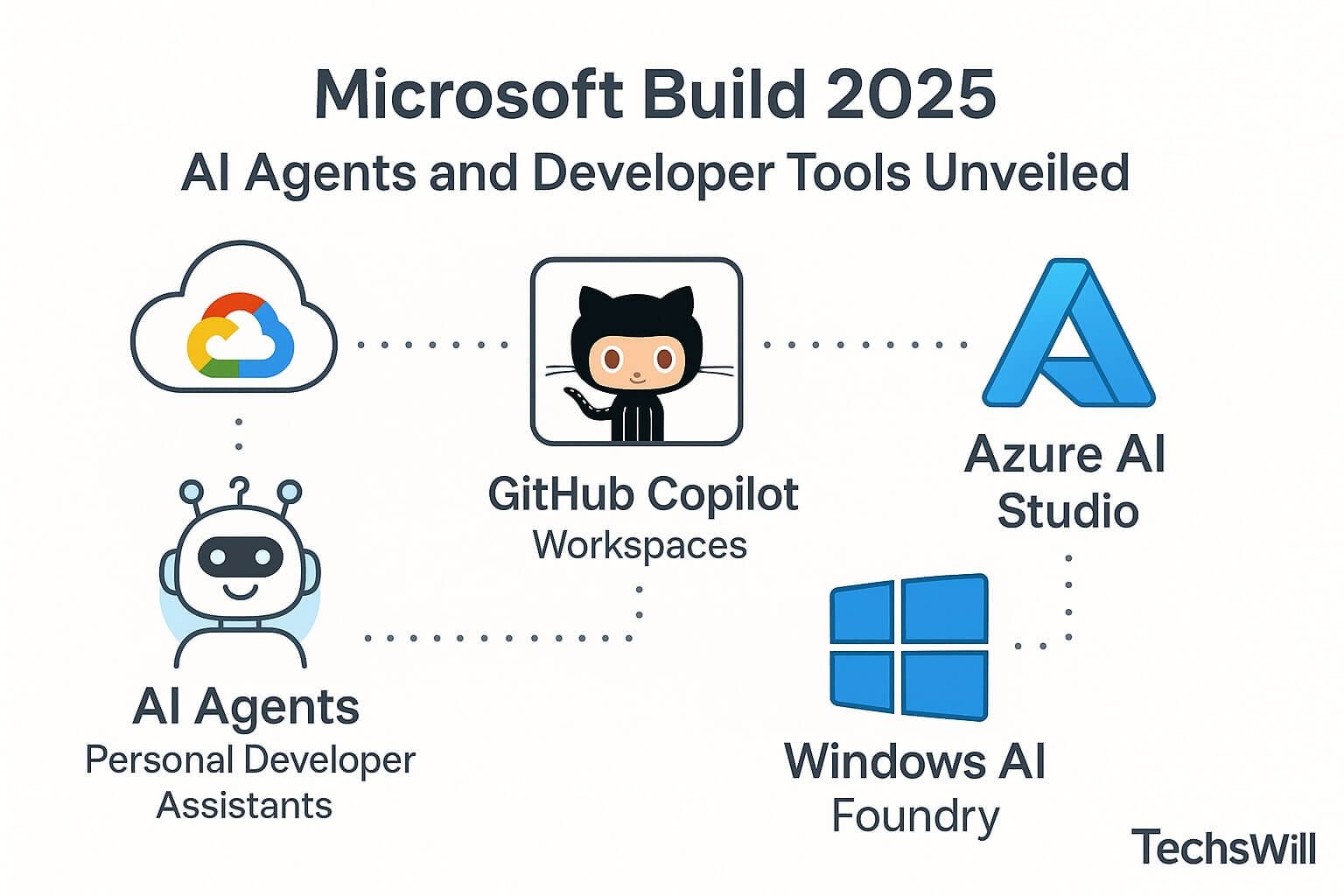
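To make the auto-scheduling idea concrete, here is a minimal sketch in Python. It assumes a 9:00 to 17:00 working day, times stored as minutes since midnight, and a simple greedy first-fit strategy. The names `Block`, `free_blocks`, and `schedule` are hypothetical and not taken from any SDK; a production agent would likely weigh priorities and deadlines as well.

```python
from dataclasses import dataclass


@dataclass
class Block:
    start: int  # minutes since midnight
    end: int


def free_blocks(busy, day_start=9 * 60, day_end=17 * 60):
    """Return the gaps between busy blocks, clipped to the working day."""
    gaps, cursor = [], day_start
    for b in sorted(busy, key=lambda b: b.start):
        # Clip each busy block to the working day before comparing.
        start = min(max(b.start, day_start), day_end)
        end = min(b.end, day_end)
        if start > cursor:
            gaps.append(Block(cursor, start))
        cursor = max(cursor, end)
    if cursor < day_end:
        gaps.append(Block(cursor, day_end))
    return gaps


def schedule(tasks, busy):
    """Greedy first-fit: put each (name, minutes) task in the earliest gap that fits."""
    gaps = free_blocks(busy)
    plan = {}
    for name, minutes in tasks:
        for gap in gaps:
            if gap.end - gap.start >= minutes:
                plan[name] = Block(gap.start, gap.start + minutes)
                gap.start += minutes  # shrink the gap we just used
                break
    return plan  # tasks that fit nowhere are simply left out
```

With meetings at 10:00 to 11:00 and 13:00 to 14:00, a 90-minute task lands in the late-morning gap and a 30-minute task takes the first slot of the day.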

⚙️ Platforms Powering AI Agents

Gemini Nano + Android AICore

- On-device prompt sessions with contextual payloads

- Intent-aware fallback models (cloud + local blending)

- Seamless UI integration with Jetpack Compose & Gemini SDK
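The cloud + local blending above can be pictured as a small router. The sketch below is illustrative only and does not use the Gemini SDK or AICore APIs: `route`, `on_device_only`, and `min_confidence` are hypothetical names, and the two models are assumed to be plain callables that return an `(answer, confidence)` pair.

```python
def route(intent, prompt, local, cloud,
          on_device_only=frozenset(), min_confidence=0.7):
    """Prefer the on-device model; fall back to the cloud only when allowed.

    `local` and `cloud` are assumed callables: prompt -> (answer, confidence).
    Intents listed in `on_device_only` never leave the device.
    """
    try:
        answer, confidence = local(prompt)
    except RuntimeError:
        # Treat an on-device failure as "no usable answer".
        answer, confidence = None, 0.0

    if confidence >= min_confidence:
        return answer, "local"
    if intent in on_device_only:
        # Privacy-sensitive intent: keep the weak local answer rather than upload.
        return answer, "local-low-confidence"

    cloud_answer, _ = cloud(prompt)
    return cloud_answer, "cloud"
```

Routing by intent as well as confidence means a request such as reading a bill aloud can stay on the device even when the local model is unsure.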

Apple Intelligence + AIEditTask + LiveContext

- Privacy-first agent execution with context injection

- Structured intent creation using AIEditTask types (summarize, answer, generate)

- Memory via Shortcuts, App Intents, and LiveContext streams

🌍 India vs US: Adoption Patterns

India

- Regional language agents: Translate, explain bills, prep forms in local dialects

- Financial agents: Balance check, UPI reminders, recharge agents

- EdTech: Voice tutors powered by on-device agents

United States

- Health/fitness: Personalized wellness advisors

- Productivity: Calendar + task + notification routing agents

- Dev tools: Code suggestion + pull request writing from mobile Git apps

🔄 How Mobile Agents Work Internally

- Context Engine → Prompt Generator → Model Executor → Action Engine → UI/Notification

- Agents combine ephemeral, per-session memory with long-term user preferences

- Security layers include intent filters, voice fingerprinting, and fallback confirmation
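The pipeline above can be sketched end to end in a few lines of Python. Everything here is a stand-in: `Context`, `build_prompt`, `stub_model`, `execute`, and `run_agent` are hypothetical names, and the "model" is a keyword matcher rather than a real LLM. The point is only to show how the stages hand off to each other and where a fallback confirmation would sit.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    session: dict = field(default_factory=dict)      # ephemeral memory
    preferences: dict = field(default_factory=dict)  # long-term preferences


def build_prompt(ctx, request):
    """Prompt Generator: fold stored preferences into the prompt text."""
    prefs = ", ".join(f"{k}={v}" for k, v in sorted(ctx.preferences.items()))
    return f"preferences: {prefs}\nrequest: {request}"


def stub_model(prompt):
    """Model Executor stand-in: maps keywords to a structured action."""
    request = prompt.rsplit("request: ", 1)[-1].lower()
    if "pay" in request:
        return {"action": "send_payment", "risky": True}
    return {"action": "create_reminder", "risky": False}


def execute(action, confirm):
    """Action Engine: risky actions need an explicit confirmation."""
    if action["risky"] and not confirm(action):
        return f"cancelled {action['action']}"
    return f"done {action['action']}"


def run_agent(ctx, request, model=stub_model, confirm=lambda action: False):
    prompt = build_prompt(ctx, request)
    action = model(prompt)
    result = execute(action, confirm)
    ctx.session["last_requested_action"] = action["action"]
    return result  # a real app would surface this in the UI or a notification
```

Defaulting `confirm` to "no" is a deliberate choice in this sketch: a risky action is cancelled unless the app wires in a real confirmation step.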

🛠 Developer Tools

- PromptSession for Android Gemini

- LiveContext debugger for iOS

- LLMChain Mobile for Python/Flutter bridges

- Langfuse SDK for observability

- PromptLayer for lifecycle + analytics

📐 UX & Design Best Practices

- Show agent actions with animations or microfeedback

- Give users control: undo, revise, pause agent

- Make handoffs between voice and touch smooth

- Log reasoning or action trace when possible
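One way to support undo, pause, and an action trace together is to record every agent action alongside the function that reverses it. The following is a minimal sketch under that assumption; `AgentAction` and `ActionLog` are hypothetical names, not part of any platform SDK.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentAction:
    description: str
    reason: str                  # why the agent chose this action
    apply: Callable[[], None]
    revert: Callable[[], None]   # how to undo it


class ActionLog:
    def __init__(self):
        self.paused = False
        self.trace = []   # human-readable history the UI can show
        self._done = []   # stack of applied actions, newest last

    def perform(self, action):
        if self.paused:
            self.trace.append(f"skipped: {action.description} (agent paused)")
            return False
        action.apply()
        self._done.append(action)
        self.trace.append(f"did: {action.description} because {action.reason}")
        return True

    def undo(self):
        """Revert the most recent action, if there is one."""
        if not self._done:
            return False
        action = self._done.pop()
        action.revert()
        self.trace.append(f"undid: {action.description}")
        return True
```

Because each entry carries its own `reason`, the same log can drive both the undo button and a "why did the agent do this?" view.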

🔐 Privacy & Permissions

- Log all agent actions and let users export the log

- Only persist memory with explicit user opt-in

- Separate intent permission from data permission
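These three rules can be combined in a single gate object. The sketch below is a hypothetical illustration, not a platform permission API: the `PrivacyGate` class and permission strings such as `intent:create_reminder`, `data:calendar`, and `memory:persist` are invented for the example.

```python
import json


class PrivacyGate:
    """Checks permissions, gates memory behind opt-in, and keeps an audit log."""

    def __init__(self, granted=()):
        self.granted = set(granted)
        self.memory = {}
        self.audit = []

    def check(self, *needed):
        """An action runs only if every permission it needs was granted.

        Intent permissions ("intent:...") and data permissions ("data:...")
        are separate strings, so one can be granted without the other.
        """
        ok = set(needed) <= self.granted
        self.audit.append({"needed": sorted(needed), "allowed": ok})
        return ok

    def remember(self, key, value):
        """Persist memory only after an explicit opt-in."""
        if not self.check("memory:persist"):
            return False
        self.memory[key] = value
        return True

    def export_audit(self):
        """Return the full log as JSON so the user can export it."""
        return json.dumps(self.audit, indent=2)
```

In this sketch denied checks are logged too, so the exported audit shows what the agent attempted as well as what it actually did.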