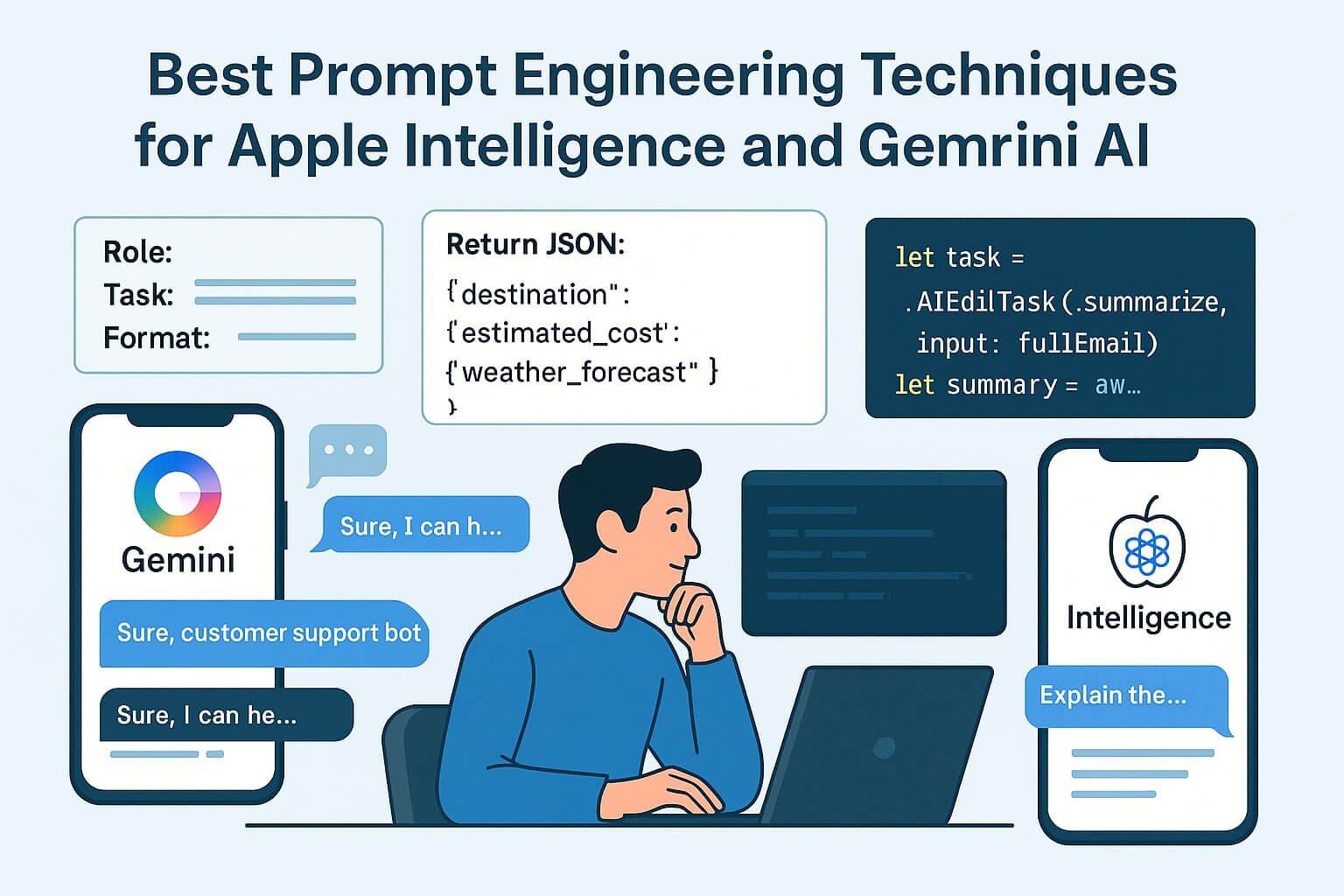

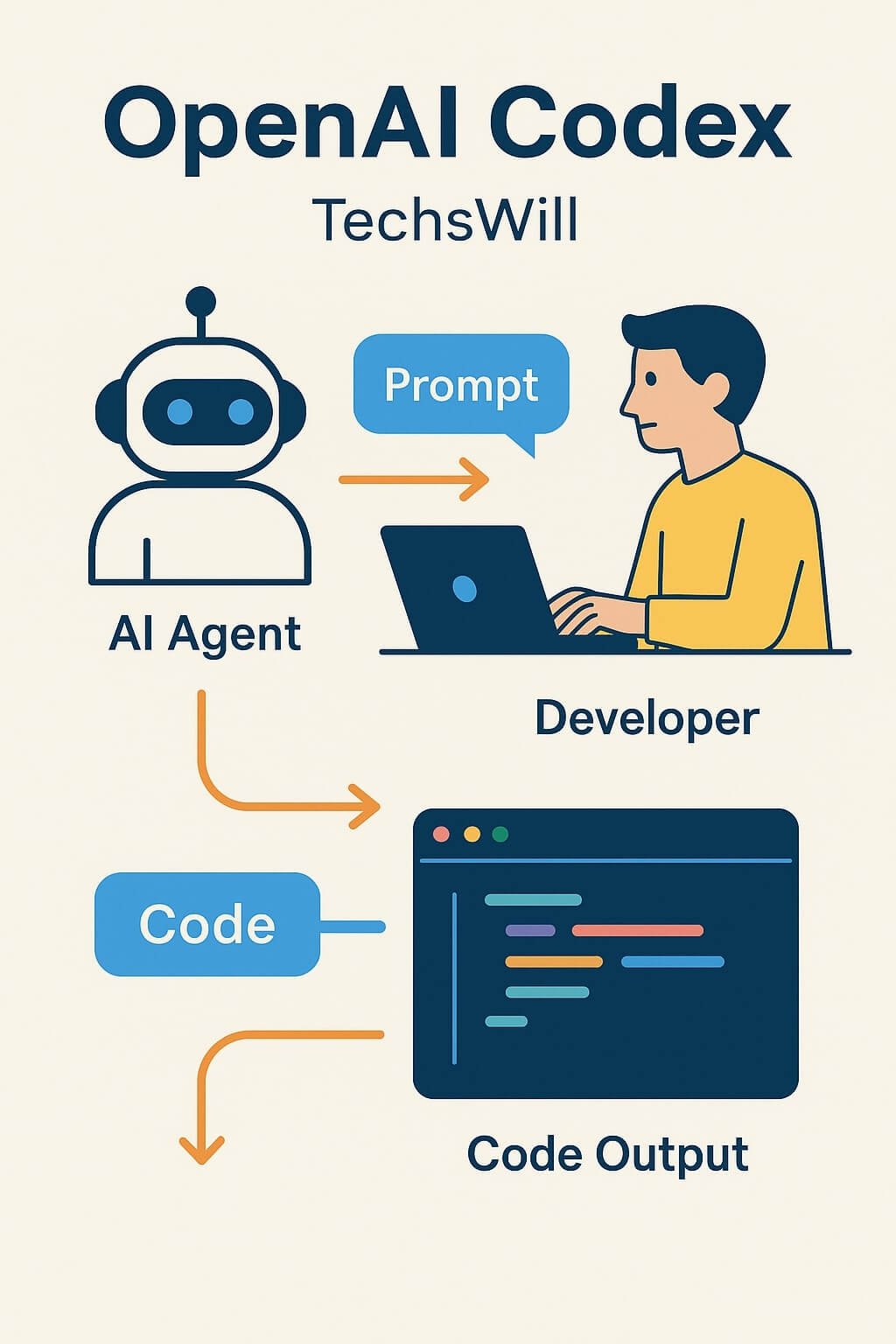

Prompt engineering is no longer just a hacky trick — it’s an essential discipline for developers working with LLMs (Large Language Models) in production. Whether you’re building iOS apps with Apple Intelligence or Android tools with Google Gemini AI, knowing how to structure, test, and optimize prompts can make the difference between a helpful assistant and a hallucinating chatbot.

🚀 What Is Prompt Engineering?

Prompt engineering is the practice of crafting structured inputs for LLMs to control:

- Output style (tone, length, persona)

- Format (JSON, bullet points, HTML, markdown)

- Content scope (topic, source context)

- Behavior (tools to use, functions to invoke)

Both platforms expose prompt-centric APIs: Gemini on Android (on-device Gemini Nano is served by the AICore system service), and Apple Intelligence through the LiveContext, AIEditTask, and PromptSession APIs used in this guide's examples.

📋 Supported Prompt Modes (2025)

| Platform | Input Types | Multi-Turn? | Output Formatting |
|---|---|---|---|
| Google Gemini | Text, Voice, Image, Structured | ✅ | JSON, Markdown, Natural Text |
| Apple Intelligence | Text, Contextual UI, Screenshot Input | ✅ | Plain text, System intents |

🧠 Prompt Syntax Fundamentals

Define Role + Task Clearly

Always define the assistant’s persona and the expected task.

Gemini prompt:

```
You are a helpful travel assistant.
Suggest a 3-day itinerary to Kerala under ₹10,000.
```

Apple prompt with `AIEditTask`:

```swift
// Request a summary of `paragraph` through a predefined task type instead of a free-form prompt
let task = AIEditTask(.summarize, input: paragraph)
let result = await AppleIntelligence.perform(task)
```

Use Lists and Bullets to Constrain Output

"Explain the concept in 3 bullet points."

"Return a JSON object like this: {title, summary, url}"
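Output constraints like "3 bullet points" can be checked mechanically before a reply reaches the user, and a failed check is a cue to re-prompt. A minimal Kotlin sketch, assuming the model marks bullets with `-`, `*`, or `•` (the function names are hypothetical):

```kotlin
// Sketch: verify that a model reply honours an "N bullet points" constraint.
// Accepted bullet markers ("- ", "* ", "• ") are an assumption; match them to your prompt.
fun bulletLines(reply: String): List<String> =
    reply.lines()
        .map { it.trim() }
        .filter { it.startsWith("- ") || it.startsWith("* ") || it.startsWith("• ") }

// True only when the reply contains exactly `expected` bullet lines.
fun hasExactBulletCount(reply: String, expected: Int): Boolean =
    bulletLines(reply).size == expected
```

Lines of prose around the bullets (an intro sentence, for example) are ignored, so only the list itself is counted.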

Apply Tone and Style Modifiers

- “Reword this email to sound more enthusiastic”

- “Make this formal and executive-sounding”

In the rest of this guide, you’ll learn:

- Best practices for crafting prompts that work on both Gemini and Apple platforms

- Function-calling patterns, response formatting, and prompt chaining

- Prompt memory design for multi-turn sessions

- Kotlin and Swift code examples

- Testing tools, performance tuning, and UX feedback models

🧠 Understanding the Prompt Layer

Prompt engineering sits at the interface between the user and the LLM — and your job as a developer is to make it:

- Precise (what should the model do?)

- Bounded (what should it not do?)

- Efficient (how do you avoid wasting tokens?)

- Composable (how does it plug into your app?)

Typical Prompt Types:

- Query answering: factual replies

- Rewriting/paraphrasing

- Summarization

- JSON generation

- Assistant-style dialogs

- Function calling / tool use

⚙️ Gemini AI Prompt Structure

🧱 Modular Prompt Layout (Kotlin)

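```kotlin
// Role + Task + Format, assembled as one prompt string
val prompt = """
    Role: You are a friendly travel assistant.
    Task: Suggest 3 weekend getaway options near Bangalore with budget tips.
    Format: Use bullet points.
""".trimIndent()

// `aiSession` is the app's Gemini session object
val response = aiSession.prompt(prompt)
```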

This Role + Task + Format layout tends to produce more accurate, consistently structured output from Gemini.
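To keep this layout consistent across features, the sections can be assembled by a small builder instead of being hand-written each time. A Kotlin sketch; `PromptSpec` and its optional `constraints` field (the "Bounded" part of a prompt) are hypothetical, not part of any Gemini SDK:

```kotlin
// Hypothetical prompt spec mirroring the Role + Task + Format layout.
data class PromptSpec(
    val role: String,
    val task: String,
    val format: String,
    val constraints: List<String> = emptyList(), // what the model should NOT do
)

// Renders the spec as labelled lines; the Constraints section is emitted only when non-empty.
fun PromptSpec.render(): String = buildString {
    appendLine("Role: $role")
    appendLine("Task: $task")
    appendLine("Format: $format")
    if (constraints.isNotEmpty()) {
        appendLine("Constraints:")
        constraints.forEach { appendLine("- $it") }
    }
}.trimEnd()
```

For example, `PromptSpec("You are a friendly travel assistant.", "Suggest 3 weekend getaway options near Bangalore with budget tips.", "Use bullet points.").render()` reproduces the prompt above without the hand-written string.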

🛠 Function Call Simulation

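```kotlin
// Ask for a fixed JSON shape and state the format rule explicitly
val prompt = """
    Return only JSON, with no extra text, using exactly these keys:
    {
      "destination": "",
      "estimated_cost": "",
      "weather_forecast": ""
    }
""".trimIndent()
```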

Gemini follows the requested format more reliably when the instruction is phrased as “return only…” or “respond strictly as JSON.”
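Even with that phrasing, a reply may arrive wrapped in a Markdown code fence or preceded by a sentence of prose, so it is safer to extract and check the object before parsing it. A minimal Kotlin sketch; `extractJsonObject` and `missingKeys` are hypothetical helpers, and the key check is a regex pre-check, not a JSON parser:

```kotlin
// Sketch: pull the outermost {...} span out of a reply that may contain prose or fences.
// Returns null when no object-like span is found.
fun extractJsonObject(reply: String): String? {
    val start = reply.indexOf('{')
    val end = reply.lastIndexOf('}')
    return if (start >= 0 && end > start) reply.substring(start, end + 1) else null
}

// Lists required keys that do not appear as `"key":` in the text.
// A cheap pre-check only; parse the object with a real JSON library before using it.
fun missingKeys(json: String, required: List<String>): List<String> =
    required.filterNot { key ->
        Regex("\"${Regex.escape(key)}\"\\s*:").containsMatchIn(json)
    }
```

A non-empty `missingKeys` result is a good trigger for a retry that repeats the “return only JSON” instruction.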

🍎 Apple Intelligence Prompt Design

🧩 Context-Aware Prompts (Swift)

```swift
let task = AIEditTask(.summarize, input: fullEmail)
let summary = await AppleIntelligence.perform(task)
```

Apple encourages prompt abstraction into task types. You specify .rewrite, .summarize, or .toneShift, and the system handles formatting implicitly.
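The same task-type abstraction can be applied on the Gemini side, where you write the prompts yourself: feature code picks a task, and one place owns the wording. A Kotlin sketch; `AppEditTask` is a hypothetical app-side enum (not Apple's `AIEditTask`), and the instruction strings are illustrative:

```kotlin
// Hypothetical app-side task types; each owns its instruction text and output rule.
enum class AppEditTask(private val instruction: String) {
    REWRITE("Rewrite the following text for clarity. Return only the rewritten text."),
    SUMMARIZE("Summarize the following text in 3 sentences. Return only the summary."),
    TONE_SHIFT("Rewrite the following text in a friendlier tone. Return only the rewritten text.");

    // Callers pass only the input; the prompt wording stays centralized here.
    fun buildPrompt(input: String): String = "$instruction\n\n$input"
}
```

Changing how every summary is requested then means editing one enum entry rather than every call site.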

🗂 Using LiveContext

```swift
let suggestion = await LiveContext.replySuggestion(for: lastUserInput)
inputField.text = suggestion
```

LiveContext handles window context, message history, and active input field to deliver contextual replies.

🧠 Prompt Memory & Multi-Turn Techniques

Gemini: Multi-Turn Session Example

```kotlin
val session = PromptSession.create()
session.prompt("What is Flutter?")
session.prompt("Can you compare it with Jetpack Compose?")
session.prompt("Which is better for Android-only apps?")
```

Within a session, Gemini retains short-term memory of earlier turns, so follow-ups like “compare it” are resolved against the previous exchange.

Apple Intelligence: Stateless + Contextual Memory

Apple prefers stateless requests, but LiveContext can simulate memory via app-layer state or clipboard/session tokens.
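App-layer memory works the same way on either platform: the app owns the history and sends a bounded slice of it with each request. A minimal Kotlin sketch; `ConversationMemory`, `Turn`, and the `maxTurns` budget are hypothetical, and the plain `role: text` transcript format is an assumption rather than a platform requirement:

```kotlin
// One remembered exchange step.
data class Turn(val role: String, val text: String)

// App-held memory for stateless requests: keeps at most `maxTurns` recent turns.
class ConversationMemory(private val maxTurns: Int = 6) {
    private val turns = ArrayDeque<Turn>()

    fun add(role: String, text: String) {
        turns.addLast(Turn(role, text))
        while (turns.size > maxTurns) turns.removeFirst() // drop the oldest turns first
    }

    // Renders the remembered turns plus the new user message as a single prompt.
    fun buildPrompt(newUserMessage: String): String = buildString {
        turns.forEach { appendLine("${it.role}: ${it.text}") }
        append("user: $newUserMessage")
    }
}
```

A turn-count window is the simplest budget; trimming by approximate token count instead follows the same shape.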

🧪 Prompt Testing Tools

🔍 Gemini Tools

- Gemini Debug Console in Android Studio

- Token usage, latency logs

- Prompt history + output diffing

🔍 Apple Intelligence Tools

- Xcode AI Simulator

- AIProfiler for latency tracing

- Prompt result viewers with diff logs
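Whatever tooling you use, a small regression suite catches prompt edits that silently break output. A Kotlin sketch of that idea; `PromptCase` and `runCases` are hypothetical, and `generate` stands in for your real Gemini or Apple model call, so the checks can also run against a fake:

```kotlin
// One regression case: a prompt plus cheap, deterministic expectations on the reply.
data class PromptCase(
    val name: String,
    val prompt: String,
    val mustContain: List<String> = emptyList(),
    val maxChars: Int = Int.MAX_VALUE,
)

// Runs every case through `generate` and returns human-readable failure messages.
fun runCases(cases: List<PromptCase>, generate: (String) -> String): List<String> =
    cases.flatMap { testCase ->
        val reply = generate(testCase.prompt)
        val failures = mutableListOf<String>()
        testCase.mustContain
            .filterNot { reply.contains(it, ignoreCase = true) }
            .forEach { failures += "${testCase.name}: missing \"$it\"" }
        if (reply.length > testCase.maxChars) {
            failures += "${testCase.name}: ${reply.length} chars > ${testCase.maxChars}"
        }
        failures
    }
```

An empty result means every case passed; logging the failures alongside the prompt version gives you a simple output diff over time.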

🎯 Common Patterns for Gemini + Apple

✅ Use Controlled Scope Prompts

"List 3 tips for beginner React developers."

"Return output in a JSON array only."

✅ Prompt Rewriting Techniques

- Rephrase user input as an AI-friendly command

- Use examples inside the prompt (“Example: X → Y”)

- Split logic: one prompt generates, another evaluates
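The second and third techniques translate directly into prompt-building helpers. A Kotlin sketch; the function names and prompt wording are illustrative assumptions:

```kotlin
// Few-shot prompt: instruction, then "Example: X → Y" pairs, then the real input.
fun fewShotPrompt(instruction: String, examples: List<Pair<String, String>>, input: String): String =
    buildString {
        appendLine(instruction)
        examples.forEach { (x, y) -> appendLine("Example: $x → $y") }
        append("Input: $input →")
    }

// Evaluator prompt for the generate-then-evaluate split: a second call grades the draft.
fun evaluatorPrompt(task: String, draft: String): String = listOf(
    "You are reviewing another model's answer.",
    "Task: $task",
    "Answer: $draft",
    "Reply with PASS or FAIL, followed by one sentence explaining why.",
).joinToString("\n")
```

The draft returned for the first prompt is passed to `evaluatorPrompt`, and a FAIL verdict can trigger a retry.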

📈 Performance Optimization

- Minimize prompt size → strip whitespace

- Use async streaming (Gemini supports it)

- Cache repeat prompts + sanitize
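Whitespace stripping and caching each take only a few lines. A Kotlin sketch; `normalizePrompt` and `PromptCache` are hypothetical, and the normalization rule (trim lines, collapse runs of spaces and blank lines) is one reasonable choice rather than a Gemini requirement:

```kotlin
// Trims each line, collapses internal whitespace runs, and removes blank lines.
fun normalizePrompt(prompt: String): String =
    prompt.lines().joinToString("\n") { it.trim().replace(Regex("\\s+"), " ") }
        .replace(Regex("\n{2,}"), "\n")
        .trim()

// Small LRU cache keyed by the normalized prompt (access-ordered LinkedHashMap).
class PromptCache(private val capacity: Int = 100) {
    private val map = object : LinkedHashMap<String, String>(16, 0.75f, true) {
        override fun removeEldestEntry(eldest: MutableMap.MutableEntry<String, String>?): Boolean =
            size > capacity // evict the least recently used entry
    }

    // Returns the cached reply, or calls `generate` with the normalized prompt and stores it.
    fun getOrPut(prompt: String, generate: (String) -> String): String {
        val key = normalizePrompt(prompt)
        return map.getOrPut(key) { generate(key) }
    }
}
```

Caching only makes sense where the same prompt should yield the same answer; skip it for personalized or time-sensitive requests.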

👨‍💻 UI/UX for Prompt Feedback

- Always show a spinner or token stream

- Show “Why this answer?” buttons

- Allow quick rephrases like “Try again”, “Make shorter”, etc.
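Quick-rephrase buttons map naturally onto follow-up prompts. A Kotlin sketch; `QuickAction` and its prompt wording are hypothetical:

```kotlin
// UI quick actions and the follow-up prompt each one sends.
enum class QuickAction(val label: String) {
    TRY_AGAIN("Try again"),
    MAKE_SHORTER("Make shorter"),
    MORE_FORMAL("More formal");

    // "Try again" re-sends the original request; the others ask for a revision of the last reply.
    fun followUp(originalPrompt: String, lastReply: String): String = when (this) {
        TRY_AGAIN -> originalPrompt
        MAKE_SHORTER -> "Rewrite this answer in half the length, keeping the key points:\n$lastReply"
        MORE_FORMAL -> "Rewrite this answer in a formal, professional tone:\n$lastReply"
    }
}
```

Each button's `label` drives the UI text, while `followUp` keeps the prompt wording out of the view layer.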

📚 Prompt Libraries & Templates

Template: Summarization

```
Summarize this text in 3 sentences:
{{ userInput }}
```

Template: Rewriting

```
Rewrite this email to be more formal:
{{ userInput }}
```
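Templates like these need a renderer that fills `{{ userInput }}` and fails loudly when a value is missing. A minimal Kotlin sketch (`renderTemplate` is a hypothetical helper; it supports only simple `{{ name }}` placeholders, with no escaping or logic):

```kotlin
// Matches {{ name }} with optional spaces inside the braces.
val PLACEHOLDER = Regex("""\{\{\s*(\w+)\s*\}\}""")

// Replaces each placeholder with its value; throws if a value is missing,
// so a raw "{{ ... }}" never reaches the model.
fun renderTemplate(template: String, values: Map<String, String>): String =
    PLACEHOLDER.replace(template) { match ->
        val name = match.groupValues[1]
        values[name] ?: throw IllegalArgumentException("No value for placeholder '$name'")
    }
```

Because the lambda form of `Regex.replace` inserts the returned text literally, user input containing `$` or `\` is passed through unchanged.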

🔬 Prompt Quality Evaluation Metrics

- Fluency

- Relevance

- Factual accuracy

- Latency

- Token count / cost
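Of these metrics, latency, token count, and a rough relevance signal can be measured automatically; fluency and factual accuracy usually need human or model-based review. A Kotlin sketch; `PromptMetrics` and `measure` are hypothetical, token counts use a rough ~4-characters-per-token heuristic (an assumption; use your provider's token counter for real cost figures), and keyword recall is only a crude relevance proxy:

```kotlin
// Automatically measurable metrics for one prompt/response pair.
data class PromptMetrics(
    val latencyMs: Long,
    val approxPromptTokens: Int,
    val approxReplyTokens: Int,
    val keywordRecall: Double, // share of expected keywords found in the reply
)

// Rough token estimate: about 4 characters per token, rounded up (heuristic only).
fun approxTokens(text: String): Int = (text.length + 3) / 4

// Times one `generate` call and scores the reply against expected keywords.
fun measure(prompt: String, expectedKeywords: List<String>, generate: (String) -> String): PromptMetrics {
    val start = System.nanoTime()
    val reply = generate(prompt)
    val latencyMs = (System.nanoTime() - start) / 1_000_000
    val hits = expectedKeywords.count { reply.contains(it, ignoreCase = true) }
    val recall = if (expectedKeywords.isEmpty()) 1.0 else hits.toDouble() / expectedKeywords.size
    return PromptMetrics(latencyMs, approxTokens(prompt), approxTokens(reply), recall)
}
```

Recording these per prompt version makes it easy to see whether a rewording made replies slower, longer, or less on-topic.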