Updated: May 2025

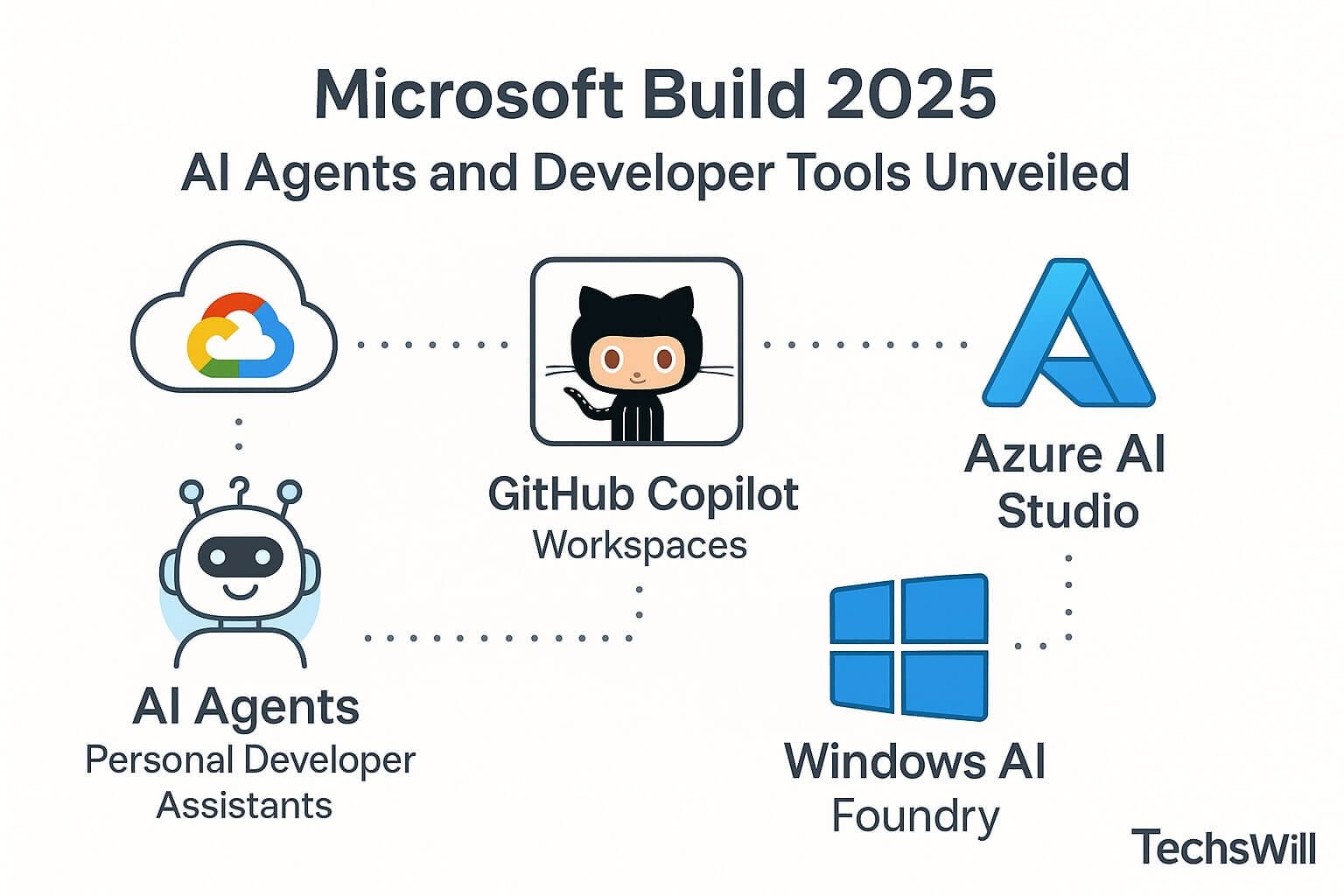

Microsoft Build 2025 placed one clear bet: the future of development is deeply collaborative, AI-assisted, and platform-agnostic. From personal AI agents to next-gen coding copilots, the announcements reflect a broader shift in how developers write, debug, deploy, and collaborate.

This post breaks down the most important tools and platforms announced at Build 2025, focusing on how they affect day-to-day development for engineers building apps, games, and developer tools for modern ecosystems.

🤖 AI Agents: Personal Developer Assistants

Microsoft introduced customizable AI agents that run on Windows, in Visual Studio, and in the cloud. These agents can proactively assist developers by:

- Understanding codebases and surfacing related documentation

- Running tests and debugging background services

- Answering domain-specific questions across projects

Each agent is powered by Azure AI Studio and built using Semantic Kernel, Microsoft’s open-source orchestration framework. You can use natural language to customize your agent’s workflow, or integrate it into existing CI/CD pipelines.
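At its core, an agent's "assist" step is a dispatch loop: the model chooses a tool by name, the host runs it, and the result goes back into the conversation. The sketch below shows that dispatch step in plain Python. It is illustrative only and does not use Semantic Kernel's API, which wraps the same idea in its own plugin and kernel-function abstractions. The `tool` decorator, the `run_tests` and `search_docs` tools (with their stub return values), and the JSON tool-call shape are all hypothetical.

```python
import json
from typing import Callable, Dict, Tuple

# Hypothetical registry: tool name -> (description shown to the model, Python function).
TOOLS: Dict[str, Tuple[str, Callable]] = {}


def tool(description: str) -> Callable:
    """Register a function so an agent can invoke it by name (illustrative, not a real SDK)."""
    def register(fn: Callable) -> Callable:
        TOOLS[fn.__name__] = (description, fn)
        return fn
    return register


@tool("Run the test suite for a module and report pass/fail counts.")
def run_tests(module: str) -> dict:
    # Stub: a real tool would shell out to the project's test runner.
    return {"module": module, "passed": 12, "failed": 0}


@tool("Search project documentation for a keyword.")
def search_docs(query: str) -> list:
    # Stub: a tiny in-memory "documentation index".
    docs = {
        "auth.md": "Token refresh and login flow",
        "build.md": "Shader compilation and asset import",
    }
    return [name for name, text in docs.items() if query.lower() in text.lower()]


def dispatch(tool_call_json: str):
    """Execute a tool call of the assumed form {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    _, fn = TOOLS[name]
    return fn(**call.get("arguments", {}))


if __name__ == "__main__":
    # A real agent would receive this string from the model; here it is hard-coded.
    print(dispatch('{"name": "search_docs", "arguments": {"query": "shader"}}'))  # ['build.md']
```

Keeping tool descriptions next to the functions mirrors how agent frameworks advertise available tools to the model, while the host keeps control over what actually executes.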

💻 GitHub Copilot Workspace (GA Release)

GitHub Copilot Workspace, first previewed in 2024, is now generally available. It is an AI-powered, goal-driven environment: a developer describes a task, and Copilot gathers the relevant context, imports dependencies, generates code suggestions, and proposes test cases.

Real-World Use Cases:

- Quickly scaffold new Unity components from scratch

- Build REST APIs in ASP.NET with built-in auth and logging

- Generate test cases from Jira ticket descriptions

GitHub Copilot has also added deeper **VS Code** and **JetBrains** IDE integrations, enabling inline suggestions, pull request reviews, and even agent-led refactoring.

📦 Azure AI Studio: Fine-Tuned Models + Agents

Azure AI Studio (rebranded as Azure AI Foundry in late 2024) is now the hub for building, managing, and deploying AI agents across Microsoft’s ecosystem. With a visual UI and YAML-based pipelines, developers can:

- Fine-tune models on private datasets

- Orchestrate multi-agent workflows

- Deploy to Microsoft Teams, Edge, Outlook, and web apps

The Studio supports OpenAI’s GPT-4 Turbo and other models from its catalog out of the box, and now offers telemetry insights such as latency breakdowns, fallback triggers, and per-token cost estimates.
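To make "fallback triggers" and "per-token cost estimates" concrete, here is a minimal sketch of the bookkeeping such telemetry implies: time each model call, fall back to a second model when the first raises an error, and estimate cost from token counts. The model names, the per-1,000-token prices, and the `fake_model` stub are hypothetical placeholders, not Azure pricing or an Azure SDK.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical prices in USD per 1,000 tokens; real prices depend on model and deployment.
PRICES = {
    "primary-model": {"input": 0.01, "output": 0.03},
    "fallback-model": {"input": 0.0005, "output": 0.0015},
}

# In this sketch a model call returns (text, input_tokens, output_tokens).
ModelCall = Callable[[str, str], Tuple[str, int, int]]


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost = input_tokens/1000 * input_price + output_tokens/1000 * output_price."""
    price = PRICES[model]
    return input_tokens / 1000 * price["input"] + output_tokens / 1000 * price["output"]


@dataclass
class CallRecord:
    model: str
    latency_s: float
    cost_usd: float
    fell_back: bool


@dataclass
class Telemetry:
    records: List[CallRecord] = field(default_factory=list)


def call_with_fallback(prompt: str, call_model: ModelCall, telemetry: Telemetry,
                       models: Sequence[str] = ("primary-model", "fallback-model")) -> str:
    """Try models in order; an exception triggers fallback. Logs latency and cost of the winner."""
    last_error: Optional[Exception] = None
    for attempt, model in enumerate(models):
        start = time.perf_counter()
        try:
            text, in_tokens, out_tokens = call_model(model, prompt)
        except Exception as exc:
            last_error = exc  # fall through to the next model
            continue
        telemetry.records.append(CallRecord(
            model=model,
            latency_s=time.perf_counter() - start,
            cost_usd=estimate_cost(model, in_tokens, out_tokens),
            fell_back=attempt > 0,
        ))
        return text
    raise RuntimeError("all models failed") from last_error


def fake_model(model: str, prompt: str) -> Tuple[str, int, int]:
    """Demo stub: the primary model always times out, so the fallback answers."""
    if model == "primary-model":
        raise TimeoutError("simulated timeout")
    return "summary of: " + prompt, 1200, 300


if __name__ == "__main__":
    telemetry = Telemetry()
    print(call_with_fallback("release notes", fake_model, telemetry))
    rec = telemetry.records[0]
    print(rec.model, rec.fell_back, f"${rec.cost_usd:.5f}")  # fallback-model True $0.00105
```

As a worked check on the arithmetic: the fallback call costs 1.2 × $0.0005 + 0.3 × $0.0015 = $0.00105, while the same 1,200 input and 300 output tokens at the primary model's placeholder prices would cost 1.2 × $0.01 + 0.3 × $0.03 = $0.021.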

🪟 Windows AI Foundry

Microsoft unveiled Windows AI Foundry, a local runtime designed for on-device inference. It lets developers ship quantized models directly inside Windows apps or run them as background AI services that work without internet access.

Supports:

- ONNX and custom ML models (including Whisper and Llama 3)

- Real-time summarization and captioning

- Offline voice-to-command systems for games and AR/VR apps
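Quantization is one of the main techniques that make on-device inference practical: storing each weight as an 8-bit integer plus a shared scale factor, instead of a 32-bit float, cuts weight storage roughly 4×. The sketch below shows symmetric per-tensor int8 quantization in plain Python purely to illustrate the idea. It is not Windows AI Foundry's or ONNX Runtime's implementation; production toolchains use optimized kernels and often per-channel scales.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: scale = max|w| / 127, q = round(w / scale)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    # Clamp to [-127, 127] to guard against floating-point overshoot at the extremes.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights: w_hat = q * scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 0.9]
    q, scale = quantize_int8(w)
    w_hat = dequantize_int8(q, scale)
    print(q)  # [50, -127, 0, 90]
    # Rounding keeps each value within half a quantization step (scale / 2), up to float error.
    print(max(abs(a - b) for a, b in zip(w, w_hat)) <= scale / 2 + 1e-12)  # True
```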

⚙️ IntelliCode and Dev Home Upgrades

Visual Studio IntelliCode now includes AI-driven performance suggestions, real-time comparison of your code against OSS benchmarks, and environment-aware linting based on project telemetry. Meanwhile, Dev Home for Windows 11 has been upgraded with:

- Live terminal previews of builds and pipelines

- Integrated dashboards for GitHub Actions and Azure DevOps

- Chat-based shell commands using AI assistants

Game developers can even monitor asset import progress, shader compilation, and CI test runs in real time from a unified Dev Home UI.
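Dev Home's dashboards are UI features, but the CI data behind a GitHub Actions view is available to any script. As an illustration (not how Dev Home itself is implemented), the sketch below calls the GitHub REST API endpoint `GET /repos/{owner}/{repo}/actions/runs` and condenses the response into one status line per run. The owner/repo values are placeholders, a `GITHUB_TOKEN` environment variable is assumed for private repositories, and the demo uses a trimmed, made-up sample payload so it runs offline.

```python
import json
import os
import urllib.request
from typing import Dict, List


def fetch_runs(owner: str, repo: str, per_page: int = 10) -> Dict:
    """Fetch recent workflow runs via GET /repos/{owner}/{repo}/actions/runs."""
    url = f"https://api.github.com/repos/{owner}/{repo}/actions/runs?per_page={per_page}"
    headers = {"Accept": "application/vnd.github+json", "X-GitHub-Api-Version": "2022-11-28"}
    token = os.environ.get("GITHUB_TOKEN")  # optional; needed for private repositories
    if token:
        headers["Authorization"] = f"Bearer {token}"
    req = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def summarize_runs(payload: Dict) -> List[str]:
    """Turn the API payload into lines like 'CI #42 [main]: completed/success'."""
    lines = []
    for run in payload.get("workflow_runs", []):
        state = run["status"]
        if run.get("conclusion"):  # conclusion is null until a run finishes
            state += f"/{run['conclusion']}"
        lines.append(f"{run['name']} #{run['run_number']} [{run['head_branch']}]: {state}")
    return lines


if __name__ == "__main__":
    # Offline demo with an invented sample payload; use fetch_runs("<owner>", "<repo>") for live data.
    sample = {"workflow_runs": [
        {"name": "CI", "run_number": 42, "head_branch": "main",
         "status": "completed", "conclusion": "success"},
        {"name": "Shader build", "run_number": 7, "head_branch": "dev",
         "status": "in_progress", "conclusion": None},
    ]}
    for line in summarize_runs(sample):
        print(line)
```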

🧪 What Should You Try First?

- Set up a GitHub Copilot Workspace for your next module or script

- Spin up an AI agent in Azure AI Studio with domain-specific docs

- Download Windows AI Foundry and test on-device summarization

- Install Semantic Kernel locally to test prompt chaining
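Prompt chaining means feeding one prompt's output into the next prompt's template. Before installing Semantic Kernel, the pattern can be prototyped in plain Python. The sketch below is illustrative and does not reflect Semantic Kernel's own API; the single `{input}` slot per template is an assumption, and `str.upper` stands in for a real model call so the result is deterministic.

```python
from typing import Callable, List


def run_chain(templates: List[str], initial_input: str,
              complete: Callable[[str], str]) -> List[str]:
    """Render each template with the previous step's output and collect every intermediate result.

    Templates must contain exactly one {input} placeholder and no other braces.
    """
    outputs: List[str] = []
    current = initial_input
    for template in templates:
        prompt = template.format(input=current)
        current = complete(prompt)  # a real chain would call an LLM here
        outputs.append(current)
    return outputs


if __name__ == "__main__":
    steps = ["Summarize: {input}", "List action items from: {input}"]
    for out in run_chain(steps, "bug in shader cache", str.upper):
        print(out)
    # SUMMARIZE: BUG IN SHADER CACHE
    # LIST ACTION ITEMS FROM: SUMMARIZE: BUG IN SHADER CACHE
```

Returning every intermediate output, not just the last one, makes it easier to see which step in a chain went wrong when swapping the stub for a real model.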