Mobile app development in 2025 is no longer just about building fast and releasing often. Developers in India and the United States are navigating a new landscape shaped by AI-first design, edge computing, cross-platform innovation, and changing user behavior.

This post outlines the top mobile app development trends in 2025 — based on real-world shifts in technology, policy, user expectations, and platform strategies. Whether you’re an indie developer, a startup engineer, or part of an enterprise team, these insights will help you build better, faster, and smarter apps in both India and the US.

📱 1. AI-First Development is Now the Norm

Most apps shipping in 2025 have an AI layer, whether it's user-facing or behind the scenes. Developers are now expected to integrate AI in:

- Search and recommendations

- Contextual UI personalization

- In-app automation (auto summaries, reply suggestions, task agents)

In the US, apps use OpenAI, Claude, and Gemini APIs for everything from support to content generation. In India, where data costs and privacy matter more, apps lean on on-device LLMs such as a quantized Llama 3 8B or Gemini Nano for offline inference.

Recommended Tools:

- llama.cpp for local models

- Google AICore SDK for Gemini integration

- Apple Intelligence APIs for iOS 18.1+
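
To make the on-device vs. cloud split concrete, here is a minimal, platform-neutral sketch of the routing decision an app might make per request. Everything in it is an assumption for illustration: the `RequestContext` fields, the `ON_DEVICE_MAX_TOKENS` limit, and the backend names are hypothetical and not part of any SDK listed above.

```python
from dataclasses import dataclass


@dataclass
class RequestContext:
    online: bool          # device currently has connectivity
    cloud_consent: bool   # user agreed to send prompts off-device
    prompt_tokens: int    # rough size of the prompt


# Hypothetical limit: what a small on-device model handles comfortably.
ON_DEVICE_MAX_TOKENS = 1024


def choose_backend(ctx: RequestContext) -> str:
    """Return 'on_device', 'cloud', or 'unavailable' for one request."""
    fits_locally = ctx.prompt_tokens <= ON_DEVICE_MAX_TOKENS
    cloud_allowed = ctx.online and ctx.cloud_consent

    if fits_locally:
        return "on_device"    # no data cost, and the prompt stays private
    if cloud_allowed:
        return "cloud"        # too large for the local model, and permitted
    return "unavailable"      # caller should trim the prompt or degrade


if __name__ == "__main__":
    offline_user = RequestContext(online=False, cloud_consent=False, prompt_tokens=200)
    print(choose_backend(offline_user))
```

Preferring the local model whenever the prompt fits is one possible policy. A team that values answer quality over data cost might flip the order.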

🚀 2. Edge Computing Powers Real-Time Interactions

Thanks to 5G and better chipsets, mobile apps now push processing to the edge.

This includes:

- Voice-to-text with no server calls

- ML image classification on-device

- Real-time translations (especially in Indian regional languages)

With tools like Core ML, MediaPipe, and ONNX Runtime Mobile, on-device performance is now good enough for many of these tasks, without the network latency or the privacy risk of sending raw data to a server.
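
Whichever runtime does the inference, the step after it usually looks the same: turn the model's raw scores into ranked labels, entirely on the device. The sketch below covers only that post-processing step. It assumes the runtime hands back a plain list of logits, and the labels and scores are made up for illustration.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities, shifted by the max for stability."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, labels, k=3):
    """Return the k most likely (label, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical output of an on-device image classifier.
    labels = ["cat", "dog", "auto-rickshaw", "bicycle"]
    logits = [1.2, 0.3, 4.1, 2.0]
    for label, prob in top_k(logits, labels, k=2):
        print(f"{label}: {prob:.2f}")
```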

🛠 3. Cross-Platform Development is Smarter (Not Just Shared Code)

2025’s cross-platform strategy isn’t just Flutter or React Native. It’s about:

- Smart module reuse across iOS and Android

- UI that adapts to platform idioms — like SwiftUI + Compose

- Shared core logic (via Kotlin Multiplatform or C++)

What’s Popular:

- India: Flutter dominates fast MVPs for fintech, edtech, and productivity

- US: SwiftUI and Compose win in performance-critical apps like banking, fitness, and health

Engineers are splitting UI and logic more clearly — and using tools like Jetpack Glance and SwiftData to create reactive systems faster.
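
Here is a rough sketch of that UI/logic split. It is written in Python only to stay platform-neutral. In a real project the core would live in Kotlin Multiplatform or C++, and the two presenters would be SwiftUI and Compose code. `CartCore`, `render_ios`, and `render_android` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class CartCore:
    """Shared business logic: no UI code, no platform imports."""
    items: dict = field(default_factory=dict)  # name -> (unit_price, quantity)

    def add(self, name: str, unit_price: float, quantity: int = 1) -> None:
        # Keep the first price seen for an item and accumulate its quantity.
        price, current = self.items.get(name, (unit_price, 0))
        self.items[name] = (price, current + quantity)

    def total(self) -> float:
        return sum(price * qty for price, qty in self.items.values())


def render_ios(cart: CartCore) -> str:
    """Thin iOS-flavored presenter (stand-in for a SwiftUI view)."""
    return f"Total: {cart.total():.2f} (tap Pay to continue)"


def render_android(cart: CartCore) -> str:
    """Thin Android-flavored presenter (stand-in for a Compose screen)."""
    return f"TOTAL {cart.total():.2f} | CHECKOUT"


if __name__ == "__main__":
    cart = CartCore()
    cart.add("notebook", 10.0, quantity=2)
    cart.add("pen", 5.5)
    print(render_ios(cart))
    print(render_android(cart))
```

The presenters only format what the core computes, so a pricing bug is fixed once and both platforms pick it up.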

💸 4. Monetization Strategies Are Getting Smarter (And Subtler)

Monetizing apps in 2025 isn’t about intrusive ads or overpriced subscriptions — it’s about smart, value-first design.

US Trends:

- AI-powered trials: Unlock features dynamically after usage milestones

- Flexible subscriptions: Tiered access + family plans using Apple's Family Sharing

- Referral-based growth loops for productivity and wellness tools

India Trends:

- Microtransactions: ₹5–₹20 IAPs for personalization or one-time upgrades

- UPI deep linking for 1-click checkouts in low-ARPU regions

- Ad-supported access with low-frequency interstitials + rewards
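
As an illustration of the UPI deep-linking idea, the sketch below builds a `upi://pay` link for a small one-time purchase. The parameter names (`pa`, `pn`, `am`, `cu`, `tn`) follow the commonly documented UPI link format, but treat them as an assumption and check the current NPCI linking specification before shipping. The payee address and product are made up.

```python
from urllib.parse import quote, urlencode


def build_upi_link(payee_vpa: str, payee_name: str, amount_inr: str, note: str) -> str:
    """Build a upi://pay deep link that a UPI app can open for checkout."""
    params = {
        "pa": payee_vpa,    # payee virtual payment address
        "pn": payee_name,   # payee display name
        "am": amount_inr,   # amount as a decimal string, e.g. "10.00"
        "cu": "INR",        # currency code
        "tn": note,         # transaction note shown to the user
    }
    # quote_via=quote encodes spaces as %20 instead of "+".
    return "upi://pay?" + urlencode(params, quote_via=quote)


if __name__ == "__main__":
    # Hypothetical merchant and product.
    print(build_upi_link("merchant@upi", "Demo Store", "10.00", "Theme pack"))
```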

💡 Devs use Firebase Remote Config and RevenueCat to test pricing and adapt in real time based on user behavior and geography.
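
A pricing test by geography boils down to two things: a per-region price table, and a stable way to put each user in one variant. The sketch below shows one way to do that with a hash. It does not use the Firebase Remote Config or RevenueCat APIs. The `PRICE_VARIANTS` table stands in for values such a service would deliver, and all names and prices are hypothetical.

```python
import hashlib

# Hypothetical price table; in practice this would come from remote config.
PRICE_VARIANTS = {
    "IN": ["₹5", "₹10", "₹20"],
    "US": ["$0.99", "$1.99"],
}
DEFAULT_REGION = "US"


def assign_price(user_id: str, region: str, experiment: str = "pricing_v1") -> str:
    """Deterministically bucket a user into one price variant for their region."""
    variants = PRICE_VARIANTS.get(region, PRICE_VARIANTS[DEFAULT_REGION])
    # Hashing experiment + user keeps the bucket stable across sessions
    # and independent between experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    print(assign_price("user-123", "IN"))
    print(assign_price("user-123", "US"))
```

Because the bucket depends only on the experiment name and the user ID, a user sees the same price on every launch without the app storing anything.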

👩‍💻 5. Developer Experience Is Finally a Product Priority

Engineering productivity is a CEO-level metric in 2025. Mobile teams are investing in:

- Cloud-based CI/CD (GitHub Actions, Bitrise, Codemagic)

- Linting + telemetry baked into design systems

- Onboarding bots: AI assistants explain legacy code and branching policies

Startups and scale-ups in both India and the US are hiring Platform Engineers to build better internal tooling and reusable UI libraries.

🔮 6. Generative UI and Component Evolution

Why code the same UI a hundred times? In 2025:

- Devs use LLMs like Gemini + Claude to generate UI components

- “Design as code” tools like Galileo and Magician write production-ready SwiftUI

- Teams auto-document UI using GPT-style summary bots

In India, small teams use these tools to bridge the gap between designers and React/Flutter devs. In the US, mid-sized teams pair design systems with LLM QA tooling.

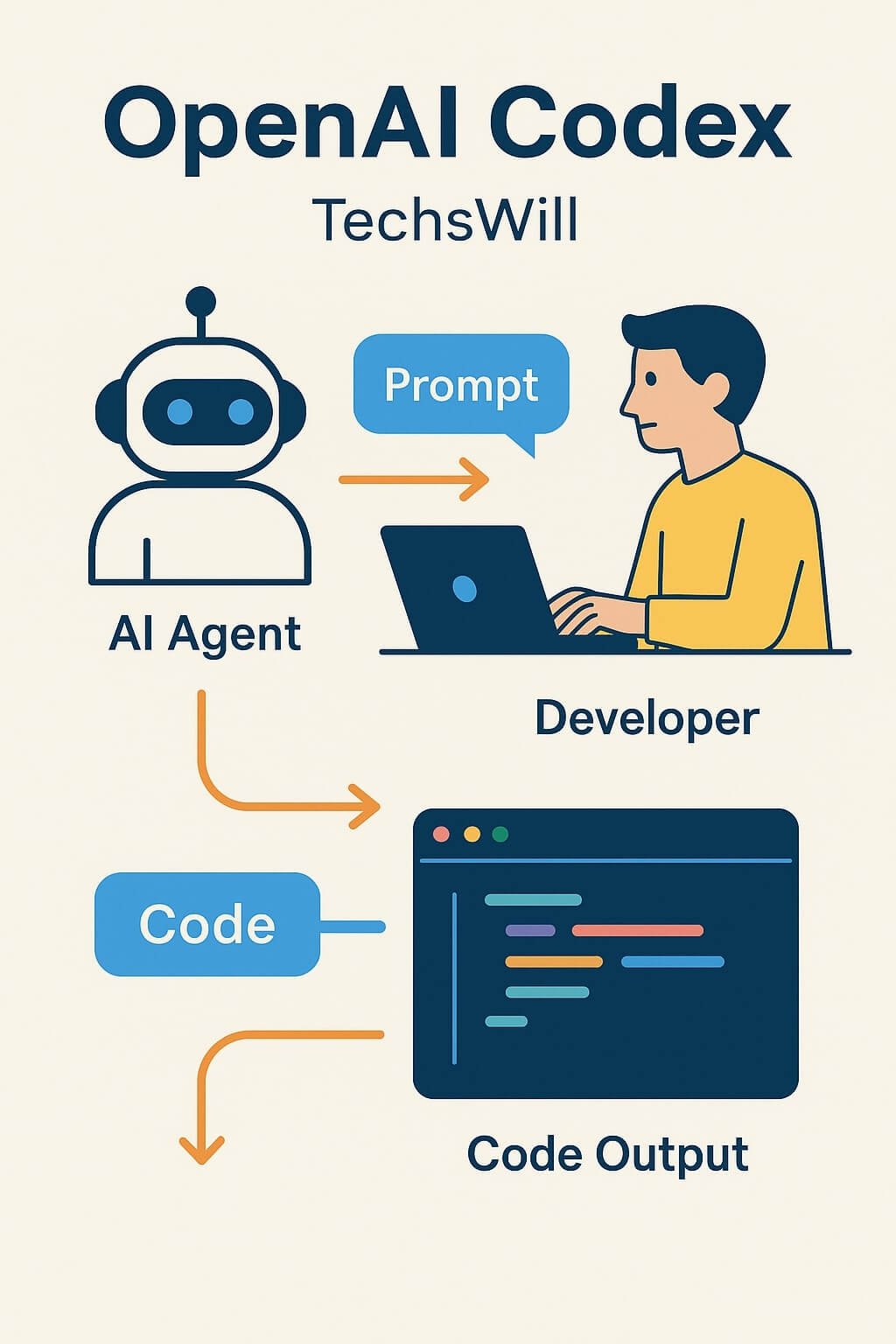

📱 7. Mobile-First AI Agents Are the New Superpower

Gemini Nano and Apple Intelligence allow you to run custom agents:

- For auto-fill, summarization, reply suggestions, planning

- Inside keyboard extensions, Spotlight, and notification trays

Mobile agents can act on context: recent actions, clipboard content, user intents.

Tools to Explore:

- Gemini AI with AICore + PromptSession

- Apple’s App Intents and Writing Tools APIs

- LangChain Mobile (community port)
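
To show what "acting on context" can mean in practice, here is a deliberately simple, rule-based sketch of the decision step. A real agent would pass this context to an on-device model instead of hard-coded rules. The `DeviceContext` fields and the suggestion names are hypothetical and do not come from any of the tools above.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class DeviceContext:
    clipboard: str = ""
    recent_action: str = ""   # e.g. "opened_calendar", "received_message"
    last_message: str = ""


URL_PATTERN = re.compile(r"https?://\S+")


def suggest_action(ctx: DeviceContext) -> Optional[str]:
    """Map the current context to at most one suggestion, most specific first."""
    if ctx.recent_action == "received_message" and ctx.last_message.strip().endswith("?"):
        return "offer_reply_suggestions"
    if URL_PATTERN.search(ctx.clipboard):
        return "offer_link_summary"
    if ctx.recent_action == "opened_calendar":
        return "offer_day_plan"
    return None   # nothing relevant: stay quiet rather than interrupt


if __name__ == "__main__":
    ctx = DeviceContext(recent_action="received_message", last_message="Lunch at 1?")
    print(suggest_action(ctx))
```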

🎓 8. Developer Career Trends: India vs US in 2025

The developer job market is evolving fast. While core coding skills still matter, 2025 favors hybrid engineers who can work with AI, low-code, and DevOps tooling.

India-Specific Trends:

- Demand for AI + Flutter full-stack devs is exploding

- Startups look for developers with deep Firebase and Razorpay experience

- Regional language support (UI, text-to-speech, input validation) is a hiring differentiator

US-Specific Trends:

- Companies seek engineers who can write and refine LLM prompts and evaluate the results

- React Native + Swift/Compose dual-experience is highly valued

- Compliance awareness (COPPA, HIPAA, ADA, CCPA) is now expected in product discussions

🛠️ Certifications like “AI Engineering for Mobile” and “LLM Security for Devs” are now appearing on resumes globally.

⚖️ 9. AI Policy, Privacy & App Store Rules

Governments and platforms are catching up with AI usage. In 2025:

- Apple mandates privacy disclosures for LLMs used in iOS apps (via Privacy Manifest)

- Google Play flags apps that send full chat logs to external LLM APIs

- India’s draft Digital India Act includes AI labeling and model sourcing transparency

- The US continues to push self-regulation but is expected to release a federal AI framework soon

💡 Developers need to plan for on-device fallback, consent-based prompt storage, and signed model delivery.
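
Two of those items can be sketched in a few lines: storing prompts only with consent, and refusing to load a model file that doesn't match what the app expects. Both pieces are illustrative assumptions. `PromptStore` is an in-memory stand-in for real storage, and the pinned SHA-256 digest is a simpler substitute for true signature verification, which would check an asymmetric signature from the publisher.

```python
import hashlib
import hmac


class PromptStore:
    """Keeps prompts only for users who have opted in."""

    def __init__(self):
        self._consented = set()
        self._prompts = {}

    def grant_consent(self, user_id: str) -> None:
        self._consented.add(user_id)

    def revoke_consent(self, user_id: str) -> None:
        self._consented.discard(user_id)
        self._prompts.pop(user_id, None)   # revoking also deletes stored prompts

    def save(self, user_id: str, prompt: str) -> bool:
        if user_id not in self._consented:
            return False                   # no consent, nothing is stored
        self._prompts.setdefault(user_id, []).append(prompt)
        return True

    def history(self, user_id: str) -> list:
        return list(self._prompts.get(user_id, []))


def model_is_trusted(model_bytes: bytes, pinned_sha256_hex: str) -> bool:
    """Compare a downloaded model against a digest pinned in the app."""
    actual = hashlib.sha256(model_bytes).hexdigest()
    return hmac.compare_digest(actual, pinned_sha256_hex)


if __name__ == "__main__":
    store = PromptStore()
    print(store.save("user-1", "summarize my notes"))   # not stored yet
    store.grant_consent("user-1")
    print(store.save("user-1", "summarize my notes"))   # stored after opt-in
```

If `model_is_trusted` returns false, the app would skip the downloaded file and fall back to the bundled model or a cloud call.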

🕶️ 10. AR/VR Enters Mainstream Use — Beyond Games

AR is now embedded into health apps, finance tools, and shopping. Apple’s visionOS and Google’s multisensory updates are reshaping what mobile means.

Examples:

- In India: AR tools help users visualize furniture in apartments, try on jewelry, and track physical fitness

- In the US: Fitness mirrors, AR-guided finance onboarding, and in-store navigation are becoming app standards

🧩 Cross-platform libraries like Unity AR Foundation and Vuforia remain relevant, but lightweight native ARKit/ARCore options are gaining ground.