Introduction: Growth Now Lives Where Tech, Data and Content Meet

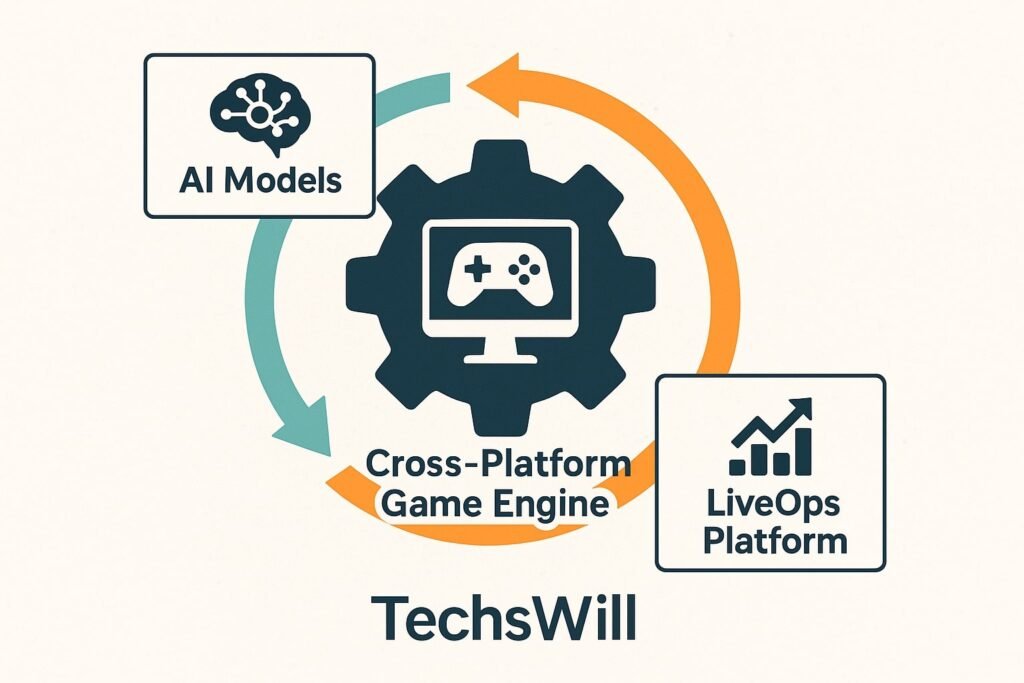

Game growth is no longer “just” about a smart UA campaign or a clever sale. The studios that win combine three powerful forces into a single system:

- AI to personalize, predict, and automate decisions.

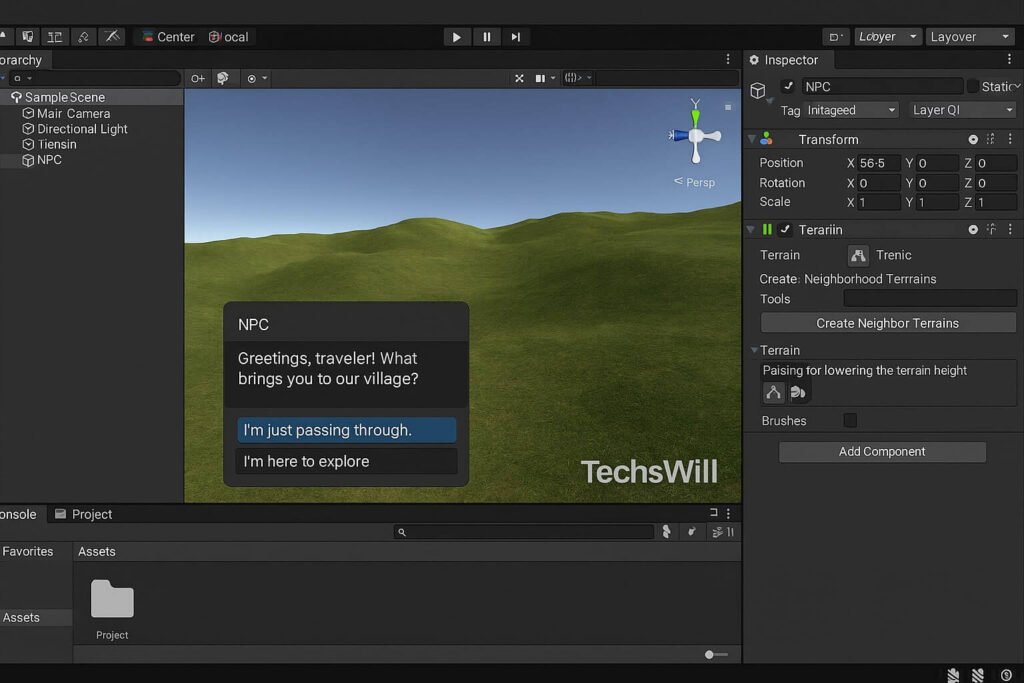

- Cross-platform engines like Unity and Unreal to ship everywhere fast and maintain one core codebase.

- LiveOps to keep the game evolving with events, offers, content drops and community moments.

Top studios aren’t treating these as separate tracks. They’re building one integrated growth machine: engines that support fast deployment, AI models that shape content and monetization, and LiveOps teams that orchestrate everything across devices and markets.

This guide breaks down how that system works in practice, which capabilities matter most, and what smaller teams can copy right now without AAA budgets.

Why This Shift Is Happening Now

Several trends are converging to make this shift unavoidable:

- Player expectations: Gamers expect ongoing events, fresh content, and cross-device continuity as a baseline, not a bonus.

- Rising UA costs: CPI keeps climbing, so studios can’t rely on brute-force paid acquisition. Retention and LTV are the most sustainable levers.

- Cross-platform engines are mature: Unity and Unreal are built for portability and service-style games, and their ecosystems offer runtime analytics, A/B testing hooks, and backend integrations.

- AI is quietly everywhere: From content generation to predictive segmentation, many studios are using AI behind the scenes—even if they don’t shout about it publicly.

So the real question isn’t “Should we use AI, LiveOps, or cross-platform?” It’s: “How fast can we wire these together into one growth engine?”

Pillar 1 — Cross-Platform Engines as the Growth Substrate

Engines like Unity and Unreal are no longer just “rendering and physics”. The best engines are the ones that:

- Compile to multiple platforms reliably (mobile, PC, console, and web where the engine supports it), and in some cases reach UGC ecosystems like Fortnite.

- Expose deep analytics and event hooks to LiveOps platforms.

- Integrate cleanly with backend services for inventory, economy, and segmentation.

- Support content pipelines that can be automated or AI-augmented.

What top teams configure inside the engine

High-performing studios treat the engine as a growth-aware runtime, not just a renderer:

- Event hooks for every meaningful action: session start, churn risk triggers, economy milestones, social engagements.

- Config-driven UX: menus, offers, entry points, and event banners are parameterized so LiveOps can change them without new builds.

- Cross-platform entitlement logic: if a player owns content on mobile, the entitlement follows them on PC or cloud versions.

This engine configuration is what lets AI and LiveOps actually do something useful later.
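The config-driven UX and entitlement ideas above can be sketched outside any engine. The Python below is a language-neutral illustration, not Unity or Unreal API: `DEFAULT_CONFIG`, `resolve_config`, and `PlayerAccount` are hypothetical names, and it assumes a unified backend that records grants per platform under one account id.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Set

# Hypothetical defaults baked into the build, so every growth surface still
# has a safe value if the remote config fetch fails.
DEFAULT_CONFIG: Dict[str, Any] = {
    "offer_slot_main_menu": "starter_pack",
    "event_banner": None,
    "show_shop_badge": False,
}


def resolve_config(defaults: Dict[str, Any], remote: Dict[str, Any]) -> Dict[str, Any]:
    """Overlay remote LiveOps values on the shipped defaults.

    Keys the client does not know about are ignored, so a typo in the LiveOps
    tool cannot inject settings the build was never written to handle.
    """
    resolved = dict(defaults)  # copy: never mutate the shipped defaults
    for key, value in remote.items():
        if key in defaults:
            resolved[key] = value
    return resolved


@dataclass
class PlayerAccount:
    # One account id shared across platforms (assumes a unified backend).
    account_id: str
    # Content ids granted on each platform, e.g. {"ios": {"skin_01"}}.
    grants: Dict[str, Set[str]] = field(default_factory=dict)

    def entitlements(self) -> Set[str]:
        """Content owned on any platform is owned on every platform."""
        owned: Set[str] = set()
        for platform_grants in self.grants.values():
            owned |= platform_grants
        return owned


# Usage: LiveOps swaps the banner without a new build; ownership follows the account.
config = resolve_config(DEFAULT_CONFIG, {"event_banner": "winter_festival", "typo_key": 1})
player = PlayerAccount("p-123", {"ios": {"skin_01"}, "pc": {"season_pass_s1"}})
print(config["event_banner"], sorted(player.entitlements()))
```

Ignoring unknown keys is a deliberate trade-off: older builds keep working when LiveOps adds settings that only newer builds understand.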

Pillar 2 — LiveOps as the Revenue Engine

LiveOps has moved from “nice-to-have events” to the backbone of the business. Well-run games now operate like live services: multiple events running in parallel, seasonal passes, collabs, rotating modes, and community-based moments.

What top LiveOps teams do differently

- Run more events, more often: Industry benchmarks show a sharp increase in concurrent events and seasonal structures across top live-service titles.

- Segment events: There are baseline events for everyone, but high-value and high-skill cohorts get tailored challenges or premium tracks.

- Blend economy and emotion: Events aren’t just “discounts”; they are framed around narrative beats, social goals, and collection arcs.

- Test and iterate: Everything—pricing, pacing, entry friction, rewards—is A/B tested and then scaled.

Without LiveOps, AI and cross-platform reach can’t fully convert into long-term revenue. LiveOps is the engine room where content, offers, and analytics are turned into actual business outcomes.
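A common way to implement the “segment, test, scale” habit in the list above is deterministic bucketing: hash the player id together with the experiment name so a player sees the same variant in every session and on every device. This is a minimal sketch; `assign_variant`, the experiment name, and the arm names are hypothetical, and many remote-config and LiveOps services provide equivalent assignment out of the box.

```python
import hashlib
from typing import List


def assign_variant(player_id: str, experiment: str,
                   variants: List[str], weights: List[float]) -> str:
    """Deterministically map a player to one experiment variant.

    Hashing the experiment name together with the player id means a player keeps
    the same variant across sessions and devices, while separate experiments are
    bucketed independently of each other.
    """
    if not variants or len(variants) != len(weights):
        raise ValueError("variants and weights must be non-empty and the same length")
    digest = hashlib.sha256(f"{experiment}:{player_id}".encode("utf-8")).hexdigest()
    point = int(digest[:8], 16) / 2**32  # first 32 bits of the hash, scaled to [0, 1)
    total = sum(weights)
    cumulative = 0.0
    for variant, weight in zip(variants, weights):
        cumulative += weight / total
        if point < cumulative:
            return variant
    return variants[-1]  # guards against floating-point rounding near 1.0


# Hypothetical reward-pacing test: 50% control, 25% each for two new curves.
arms = ["control", "faster_rewards", "bigger_final_reward"]
print(assign_variant("player-123", "winter_event_pacing", arms, [0.5, 0.25, 0.25]))
```

Because assignment needs no stored state, the client and the analytics pipeline can each recompute a player's arm and agree on it.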

Pillar 3 — AI as the Brain Around the Whole System

AI is less about “crazy experimental features” and more about systemic advantages:

- Personalization: AI models predict which players respond to which events, bundles, or difficulty curves.

- Forecasting: LiveOps teams use predictive metrics for retention, churn, and revenue impact before rolling out big beats.

- Content assistance: AI helps build variants of levels, quests, challenges, and even social copy at scale.

- Operational automation: ML-based systems decide who enters which experiment, when to end tests, or when to auto-adjust offers.

Example: AI-assisted LiveOps loop

- Observe: Engine sends granular events (session patterns, purchase funnels, feature usage) to your data stack.

- Model: AI clusters players (e.g., “social whales”, “challenge seekers”, “decorators”, “dabblers”).

- Act: LiveOps config is generated or recommended per segment (event variations, reward tables, difficulty curves).

- Learn: Post-event metrics feed back into models to refine rules and predictions.
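The Observe, Model, and Act steps can be illustrated with plain k-means clustering, which is one common (but not the only) way to build behavioral segments. In this sketch the feature vectors, the cluster-to-config mapping, and all names are hypothetical. Features are assumed to be pre-scaled to comparable ranges, and readable segment names such as “social whales” are assigned by an analyst after inspecting the clusters, not by the algorithm.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, ...]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Point], k: int, iterations: int = 20) -> List[int]:
    """Plain k-means (Lloyd's algorithm); returns a cluster index per point.

    Centroids are seeded with the first k points for reproducibility;
    production code would normally use k-means++ seeding and several restarts.
    """
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each player joins the nearest centroid.
        labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels


# Observe: hypothetical scaled features (sessions/week, spend, social actions).
players: Dict[str, Point] = {
    "p1": (0.9, 0.8, 0.9), "p2": (0.8, 0.9, 0.7),  # engaged, spending, social
    "p3": (0.2, 0.0, 0.1), "p4": (0.1, 0.1, 0.0),  # light, non-paying
}
# Model: cluster the players.
ids = list(players)
labels = kmeans([players[pid] for pid in ids], k=2)
# Act: an analyst maps each cluster to a LiveOps config (hypothetical names);
# here, the cluster containing p1 and the one containing p3.
cluster_config = {labels[0]: "social_whale_event", labels[2]: "dabbler_event"}
plan = {pid: cluster_config[label] for pid, label in zip(ids, labels)}
print(plan)
```

The Learn step would rerun the clustering after each event with fresh features and check whether the segment-specific configs actually moved the target metric.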

How Top Studios Combine All Three in Practice

Let’s stitch this together into something concrete. Here’s how a strong studio might run a major seasonal campaign in 2025:

1. Cross-platform foundation

The game runs on Unity or Unreal and ships to iOS, Android, PC, and maybe a UGC platform like Fortnite’s creator ecosystem. All platforms share a unified backend for identity, inventory, and LiveOps config.

2. AI-informed campaign design

Data scientists and LiveOps PMs look at last season’s metrics: which cohorts drove revenue, who churned, who engaged with social features. AI models highlight:

- Likely win-back cohorts for reactivation offers.

- High-LTV cohorts who respond to cosmetic or battle-pass content.

- Mid-segment players at risk who need more progression clarity or easier early wins.
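Once a churn model and last season’s data exist, mapping players into the three cohorts above can start as a few ordered rules. This is a minimal sketch: the thresholds, field names, and `campaign_cohort` are hypothetical placeholders to tune on your own data, and `churn_risk` is assumed to come from a separate model.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune them on last season's data.
LAPSED_DAYS = 14
HIGH_LTV_SPEND_USD = 100.0
AT_RISK_SCORE = 0.6


@dataclass
class PlayerSnapshot:
    player_id: str
    days_since_last_session: int
    lifetime_spend_usd: float
    churn_risk: float  # 0..1, assumed to come from a separate churn model


def campaign_cohort(p: PlayerSnapshot) -> str:
    """Ordered rules: the first match wins."""
    if p.days_since_last_session >= LAPSED_DAYS:
        return "win_back"      # reactivation offers
    if p.lifetime_spend_usd >= HIGH_LTV_SPEND_USD:
        return "high_ltv"      # cosmetic or battle-pass content
    if p.churn_risk >= AT_RISK_SCORE:
        return "at_risk_mid"   # clearer progression, easier early wins
    return "core"


snapshots = [
    PlayerSnapshot("a", days_since_last_session=30, lifetime_spend_usd=250.0, churn_risk=0.9),
    PlayerSnapshot("b", days_since_last_session=1, lifetime_spend_usd=250.0, churn_risk=0.1),
    PlayerSnapshot("c", days_since_last_session=3, lifetime_spend_usd=5.0, churn_risk=0.7),
    PlayerSnapshot("d", days_since_last_session=0, lifetime_spend_usd=0.0, churn_risk=0.2),
]
print({s.player_id: campaign_cohort(s) for s in snapshots})
```

Rule order is a policy decision: in this sketch a lapsed big spender receives the win-back offer rather than the high-LTV track.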

3. Segment-specific LiveOps tracks

The same seasonal event exists in multiple “flavors”:

- A core free track for everyone.

- Deeper, challenge-heavy tracks for high-skill players with exclusive cosmetics.

- Soft-onboarding runs for returning players with boosted rewards and simpler goals.

4. Engine-driven execution

All of this is controlled via config loaded at runtime: the engine reads the player’s segment, shows the correct version of each event, surfaces context-aware offers, and pushes notifications to the right device.
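The event flavors in step 3 and the runtime read in step 4 can share one config document. This is a minimal sketch, assuming the backend delivers a per-event object keyed by segment name; the segment names, fields, and `event_for` are hypothetical. Each flavor lists only what differs from the core track, and segments without an entry fall back to it.

```python
from typing import Any, Dict

# Hypothetical runtime config for one seasonal event, as the client might receive it.
SEASONAL_EVENT: Dict[str, Dict[str, Any]] = {
    "core": {"track": "free", "goal_points": 1000, "reward_multiplier": 1.0},
    "high_skill": {"track": "challenge", "goal_points": 2500,
                   "exclusive_cosmetic": "frost_crown"},
    "returning": {"track": "soft_onboarding", "goal_points": 400,
                  "reward_multiplier": 1.5},
}


def event_for(segment: str, event_config: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Layer a segment's overrides on the core track; unknown segments get core."""
    flavor = dict(event_config["core"])  # copy so the shared config is never mutated
    flavor.update(event_config.get(segment, {}))
    return flavor


for segment in ("high_skill", "returning", "segment_not_configured"):
    print(segment, event_for(segment, SEASONAL_EVENT))
```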

5. Near-real-time optimization

During the event, AI models continuously monitor engagement and monetization. If participation drops in a certain region or device cohort, LiveOps can adjust difficulty, rewards, or entry friction without shipping a new build.
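Detecting a participation drop in a region or device cohort can start as a simple comparison of each cohort’s live rate with a baseline. This is a minimal sketch: `CohortStats`, the 20% relative-drop threshold, and the minimum cohort size are hypothetical choices, and the output is a list of cohorts for LiveOps to review rather than an automatic change.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CohortStats:
    cohort: str            # e.g. "BR/android": region and device, hypothetical key
    eligible_players: int
    participants: int
    baseline_rate: float   # participation at the same point in a comparable past event


def flag_participation_drops(stats: List[CohortStats], max_relative_drop: float = 0.20,
                             min_players: int = 500) -> List[str]:
    """Return cohorts whose live participation is more than max_relative_drop below
    baseline. Cohorts smaller than min_players are skipped because their rates are noisy."""
    flagged = []
    for s in stats:
        if s.eligible_players < min_players or s.baseline_rate <= 0:
            continue
        live_rate = s.participants / s.eligible_players
        relative_drop = (s.baseline_rate - live_rate) / s.baseline_rate
        if relative_drop > max_relative_drop:
            flagged.append(s.cohort)
    return flagged


live = [
    CohortStats("BR/android", eligible_players=1_000, participants=150, baseline_rate=0.30),
    CohortStats("US/ios", eligible_players=2_000, participants=560, baseline_rate=0.30),
    CohortStats("NZ/pc", eligible_players=100, participants=5, baseline_rate=0.30),
]
print(flag_participation_drops(live))
```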

Learnings from 2025 Trends & Benchmarks

Recent reports and benchmarks highlight a few consistent patterns across successful studios:

- Hybrid monetization wins: Games combining IAPs, subscriptions, battle passes, and LiveOps-driven events outperform single-model monetization.

- Event cadence keeps rising: Top live-service games run more frequent, layered events than ever before.

- Feature depth + accessibility: “Hybrid-casual” games (simple core, deep meta) dominate revenue growth, backed by LiveOps and data-driven tuning.

- AI helps behind the scenes: Personalization, segmentation, and content testing increasingly rely on AI, even when studios don’t market it.

- Cross-platform reach is expected: Engines that support multi-device play while preserving a single player profile and economy give studios a long-term advantage.

What Smaller Teams Can Borrow Without AAA Resources

You don’t need a 50-person data team to use the same patterns. Here’s a pragmatic playbook for smaller studios:

1. Start with engine instrumentation

- Log core events: session start/end, level completion, soft-/hard-currency flows, key feature usage.

- Make anything growth-related config-driven (offer placements, banners, difficulty toggles, event gates).
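A shared event envelope keeps these core events consistent across platforms. This is a minimal sketch, assuming events are serialized to JSON and sent to your own data stack; the event names, fields, and the `GameEvent` class are hypothetical, and engine analytics SDKs define their own schemas.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field
from typing import Any, Dict

# Hypothetical allow-list of core events from the checklist above.
CORE_EVENTS = {"session_start", "session_end", "level_complete", "currency_flow", "feature_use"}


@dataclass
class GameEvent:
    name: str
    player_id: str
    platform: str  # e.g. "ios", "android", "pc"
    params: Dict[str, Any] = field(default_factory=dict)
    # Unique id lets the pipeline drop duplicates when a client retries a send.
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: float = field(default_factory=time.time)

    def __post_init__(self) -> None:
        # Reject ad-hoc event names so dashboards are not split by typos.
        if self.name not in CORE_EVENTS:
            raise ValueError(f"unknown event name: {self.name}")

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


def currency_flow(player_id: str, platform: str, currency: str,
                  amount: int, reason: str) -> GameEvent:
    """Log a soft/hard currency change: positive = earned (source), negative = spent (sink)."""
    return GameEvent("currency_flow", player_id, platform,
                     {"currency": currency, "amount": amount, "reason": reason})


print(currency_flow("p-42", "android", "gems", -50, "shop_bundle").to_json())
```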

2. Build a simple, repeatable LiveOps calendar

- Rotate 2–3 reliable event types (progress race, collection album, leaderboard, co-op challenge).

- Add a monthly “anchor” event (season pass, major collab, big economy beat).
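The rotation-plus-anchor pattern above is easy to generate programmatically and then adjust by hand. This is a minimal sketch, assuming one event slot per week and an anchor in the first slot of each calendar month; the event type names and `build_calendar` are hypothetical.

```python
from datetime import date, timedelta
from typing import List, Tuple

# Hypothetical event types: 2-3 reliable formats plus one monthly anchor.
ROTATING_EVENTS = ["progress_race", "collection_album", "leaderboard"]
ANCHOR_EVENT = "monthly_anchor"  # e.g. season pass, major collab, economy beat


def build_calendar(start: date, weeks: int) -> List[Tuple[date, str]]:
    """One event slot per week. The first slot that starts in each calendar month
    hosts the anchor event; every other slot cycles through ROTATING_EVENTS."""
    calendar: List[Tuple[date, str]] = []
    months_with_anchor = set()
    rotation = 0
    for i in range(weeks):
        week_start = start + timedelta(weeks=i)
        month = (week_start.year, week_start.month)
        if month not in months_with_anchor:
            months_with_anchor.add(month)
            calendar.append((week_start, ANCHOR_EVENT))
        else:
            calendar.append((week_start, ROTATING_EVENTS[rotation % len(ROTATING_EVENTS)]))
            rotation += 1
    return calendar


# Roughly three months of weekly slots starting Monday 6 January 2025.
for week_start, event in build_calendar(date(2025, 1, 6), weeks=13):
    print(week_start.isoformat(), event)
```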

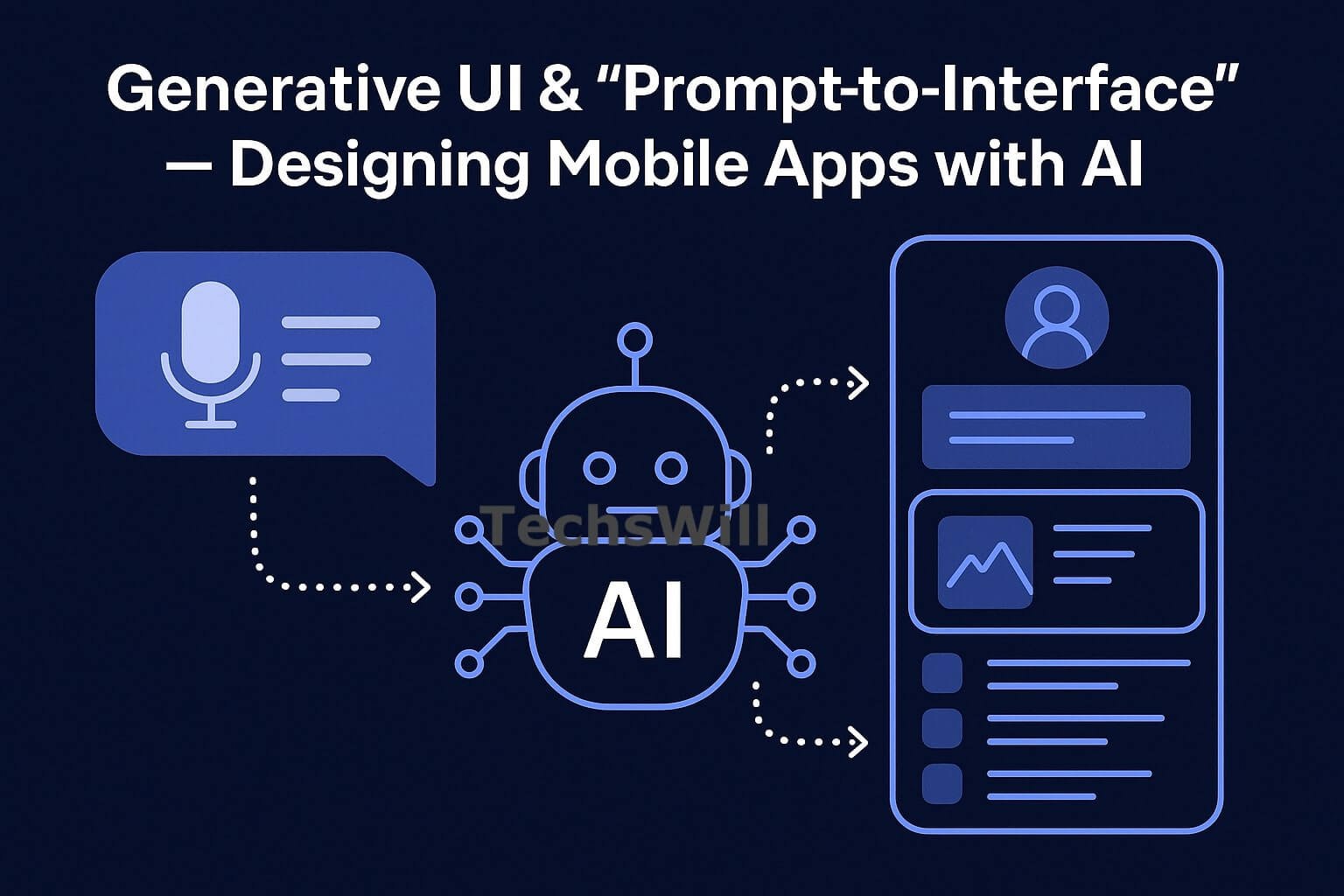

3. Use AI where it saves time first

- AI-assisted copy for event naming, descriptions, and localized variations.

- Basic churn prediction and segmentation via off-the-shelf tools or analytics platforms.

- Use generative tools to prototype variations of levels or cosmetics; keep final art curated.
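Off-the-shelf tools hide the model, but a basic churn predictor is often a logistic regression over a few engagement features. The sketch below trains one with batch gradient descent purely to show the mechanics; the features, the tiny training set, and the function names are invented for illustration, and in practice a library such as scikit-learn or an analytics platform’s built-in model would do this job.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(weights: Sequence[float], features: Sequence[float]) -> float:
    """Churn probability; weights[0] is the bias term."""
    return sigmoid(weights[0] + sum(w * x for w, x in zip(weights[1:], features)))


def train_logistic(X: List[List[float]], y: List[int],
                   lr: float = 0.5, epochs: int = 2000) -> List[float]:
    """Minimize log-loss with batch gradient descent; returns [bias, w1, w2, ...]."""
    weights = [0.0] * (len(X[0]) + 1)
    n = len(X)
    for _ in range(epochs):
        grad = [0.0] * len(weights)
        for features, label in zip(X, y):
            error = predict(weights, features) - label  # d(log-loss)/d(logit)
            grad[0] += error
            for j, x in enumerate(features, start=1):
                grad[j] += error * x
        weights = [w - lr * g / n for w, g in zip(weights, grad)]
    return weights


# Toy data, invented for illustration. Features (scaled to 0..1):
# [sessions in last 7 days / 7, days since last session / 30]; label 1 = churned.
X = [[1.0, 0.0], [0.9, 0.03], [0.8, 0.1], [0.1, 0.6], [0.0, 0.9], [0.2, 0.7]]
y = [0, 0, 0, 1, 1, 1]
w = train_logistic(X, y)
print(round(predict(w, [0.95, 0.0]), 3), round(predict(w, [0.05, 0.8]), 3))
```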

4. Pick one key KPI per quarter

Instead of chasing every metric, pick one priority at a time: D1/D7 retention, ARPDAU, or event participation. Align LiveOps experiments and AI usage to that single goal, then move to the next once you see steady improvement.
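The KPIs named above have simple definitions that are worth pinning down in code so every experiment is measured the same way. This is a minimal sketch using the classic exact-day definition of Dn retention (some tools report rolling retention instead); the input shapes and function names are hypothetical.

```python
from datetime import date, timedelta
from typing import Dict, Iterable, Set, Tuple


def day_n_retention(installs: Dict[str, date], active_days: Dict[str, Set[date]],
                    cohort_day: date, n: int) -> float:
    """Share of players who installed on cohort_day and were active exactly n days later."""
    cohort = [pid for pid, installed in installs.items() if installed == cohort_day]
    if not cohort:
        return 0.0
    target = cohort_day + timedelta(days=n)
    retained = sum(1 for pid in cohort if target in active_days.get(pid, set()))
    return retained / len(cohort)


def arpdau(purchases: Iterable[Tuple[str, float]], active_players: Set[str]) -> float:
    """Total revenue on one day divided by that day's distinct active users."""
    if not active_players:
        return 0.0
    return sum(amount for _, amount in purchases) / len(active_players)


# Toy data for illustration only.
installs = {"a": date(2025, 3, 1), "b": date(2025, 3, 1), "c": date(2025, 3, 1), "d": date(2025, 3, 2)}
active_days = {"a": {date(2025, 3, 2), date(2025, 3, 8)}, "b": {date(2025, 3, 2)}, "c": set()}
print(day_n_retention(installs, active_days, date(2025, 3, 1), 1))  # D1
print(day_n_retention(installs, active_days, date(2025, 3, 1), 7))  # D7
print(arpdau([("a", 4.99), ("b", 0.99)], {"a", "b", "c", "d"}))
```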

Common Pitfalls When Combining AI, Engines & LiveOps

Not every studio gets this right. Some common traps:

- Over-automating: Letting AI change too much, too fast without human oversight; this can break economies or confuse players.

- Under-instrumentation: Trying to do AI without enough clean data—garbage in, garbage out.

- Ignoring creative constraints: Pushing generic, over-optimized offers that feel “samey” and kill brand identity.

- Platform-blind design: Assuming all platforms behave the same; mobile vs PC vs cloud often have different play patterns and tolerances.
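One way to avoid the over-automating trap is to let models propose changes but cap how far a single automated step can move a parameter, and to route large proposals to a human. This is a minimal sketch; the 10% step cap, the 25% review threshold, and the `Proposal` type are hypothetical policy choices, not figures from any benchmark.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Proposal:
    parameter: str    # e.g. "event_reward_multiplier" (hypothetical)
    current: float
    proposed: float   # value suggested by a model


def apply_guardrail(p: Proposal, max_step: float = 0.10,
                    review_threshold: float = 0.25) -> Tuple[float, bool]:
    """Return (value_to_apply, needs_human_review).

    The applied change is clamped to +/- max_step relative to the current value;
    any proposal that wanted to move further than review_threshold is flagged.
    """
    if p.current == 0:
        return p.current, True  # relative limits are undefined at zero: escalate
    relative = (p.proposed - p.current) / abs(p.current)
    needs_review = abs(relative) > review_threshold
    step = max(-max_step, min(max_step, relative))
    return p.current + step * abs(p.current), needs_review


print(apply_guardrail(Proposal("event_reward_multiplier", current=1.0, proposed=1.6)))
print(apply_guardrail(Proposal("daily_offer_discount", current=0.20, proposed=0.21)))
```

Clamping plus review keeps the model useful for small, frequent tuning while leaving economy-breaking jumps to a LiveOps owner.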

Putting It All Together: Your 90-Day Action Plan

Here’s a concrete 90-day roadmap to begin combining AI, cross-platform engines and LiveOps:

- Days 1–30: Instrument engine events, move key growth surfaces to config (offers, banners, event entries), and design a basic 3-month LiveOps calendar.

- Days 31–60: Introduce simple AI use-cases: churn prediction, basic cohort segmentation, and AI-assisted copy for events and offers.

- Days 61–90: Start running segmented events, experiment with at least one AI-informed pricing or reward-curve test, and set up post-mortems after each major event.

By the end of that window, you don’t just “use AI” or “run LiveOps”—you have the outlines of a joined-up system that can scale with your game.
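For the AI-informed pricing or reward-curve test in days 61 to 90, each post-mortem needs a consistent way to judge whether a difference is real. This is a minimal sketch of a two-proportion z-test on a conversion-style metric (for example, the share of players who complete the event or buy the bundle); the function name and example counts are hypothetical. It assumes a sample size fixed before launch, since repeatedly peeking and stopping as soon as p < 0.05 inflates false positives.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for H0: variants A and B share one conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all (0% or 100% everywhere)
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: control reward curve (A) vs AI-suggested curve (B).
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=600, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```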

Conclusion: The Studios That Connect the Dots Will Win

The big story of 2025 isn’t just AI in isolation, or cross-platform play, or LiveOps. It’s the way they amplify each other when used as a unified strategy. Engines give you reach. LiveOps turns that reach into long-term relationships. AI makes both smarter, faster, and more efficient.

Whether you’re running a mid-sized mobile hit or building your first hybrid-casual title, the opportunity is the same: treat technology, content, and operations as one system. The studios that connect those dots—deliberately and early—will own retention and monetization in the years ahead.
