Updated: May 2025

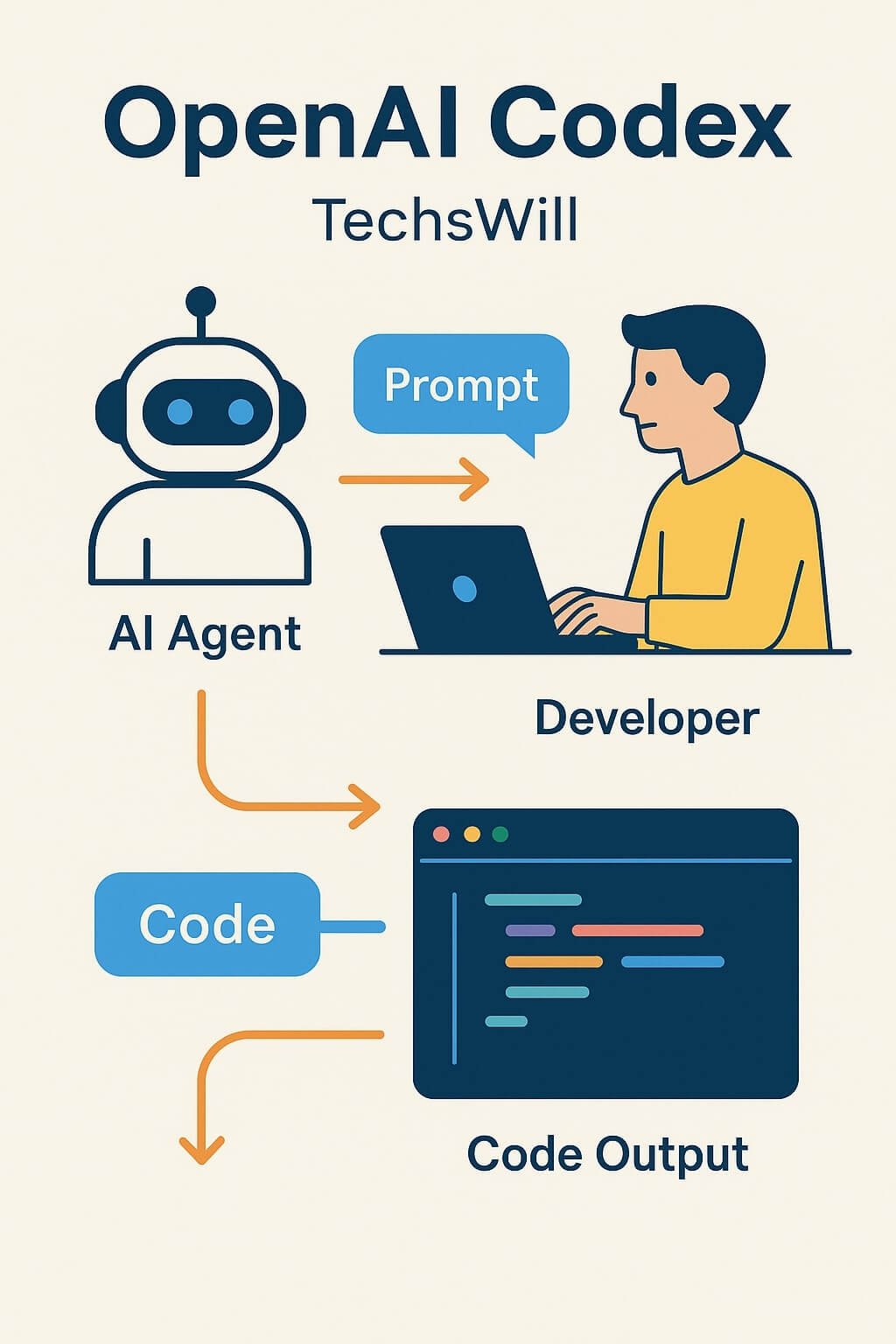

The way we write software is changing. With AI-powered coding tools like OpenAI Codex, developers are no longer just the authors of code; they are becoming its collaborators, curators, and supervisors. Codex is part of a new generation of coding agents that can write, understand, and debug code across many languages and frameworks. This post looks at how Codex works, what it means for software engineering, and how developers can integrate it into their workflow responsibly.

🤖 What is OpenAI Codex?

Codex is an advanced AI system developed by OpenAI, built on top of the GPT architecture. It has been trained on a vast corpus of code from GitHub, Stack Overflow, documentation, and open-source projects. Codex understands both natural language and programming syntax, enabling it to perform tasks like:

- Auto-completing code from a simple comment or prompt

- Writing full functions or classes in Python, JavaScript, TypeScript, Go, and more

- Translating code between languages

- Identifying bugs and proposing fixes

- Answering questions about unfamiliar code

Developers can access Codex through the OpenAI API or GitHub Copilot, or embed it in their own developer tools using the Codex SDK.

🧠 How Codex Works Behind the Scenes

Codex is a transformer-based neural network that processes natural language and code as sequences of tokens. It is context-aware: its predictions draw on the surrounding code, including nearby comments, variable names, and recurring patterns in the project. For developers, this means:

- Contextual suggestions tailored to the project

- Smart completions with correct syntax and indentation

- In-line documentation generation
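To make "context-aware" more concrete, here is a minimal sketch of how an editor integration might gather the code around the cursor before requesting a completion. The helper `build_completion_context`, the line-based window, and the prefix/suffix split are illustrative assumptions, not a description of how Codex or Copilot actually assemble their prompts.

```python
from dataclasses import dataclass


@dataclass
class CompletionContext:
    """Source text on either side of the cursor."""
    prefix: str  # code before the cursor: imports, comments, earlier definitions
    suffix: str  # code after the cursor that a completion has to fit in front of


def build_completion_context(source: str, cursor: int, max_lines: int = 20) -> CompletionContext:
    """Hypothetical helper: keep up to `max_lines` lines on each side of `cursor`.

    Real tools typically budget context in tokens rather than lines;
    lines are used here only to keep the sketch simple.
    """
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor must lie within the source text")
    if max_lines < 1:
        raise ValueError("max_lines must be at least 1")
    before = source[:cursor].splitlines(keepends=True)
    after = source[cursor:].splitlines(keepends=True)
    # The lines closest to the cursor are usually the most relevant,
    # so trim from the far ends of the file.
    return CompletionContext(
        prefix="".join(before[-max_lines:]),
        suffix="".join(after[:max_lines]),
    )
```

With the cursor inside an empty function body, `prefix` carries the preceding comment and signature, which is exactly the kind of signal the suggestions above depend on.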

Example Prompt → Output:

```python
# Prompt:
# Create a function that fetches weather data and returns temperature in Celsius
def get_weather(city_name):
```

Codex Output:

```python
import requests

def get_weather(city_name):
    api_key = "your_api_key"
    url = f"https://api.weatherapi.com/v1/current.json?key={api_key}&q={city_name}"
    response = requests.get(url)
    data = response.json()
    return data['current']['temp_c']
```
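Generated code like this is a starting point rather than a finished function. A reviewer would likely ask for a timeout, error handling, a URL-encoded query string, and an API key read from the environment instead of hard-coded. The sketch below shows one way to do that using only the standard library. It assumes the WeatherAPI.com `current.json` endpoint and the `current.temp_c` field used in the output above; the environment variable `WEATHERAPI_KEY` and the helper names are hypothetical.

```python
import json
import os
import urllib.parse
import urllib.request

BASE_URL = "https://api.weatherapi.com/v1/current.json"


def build_weather_url(city_name: str, api_key: str) -> str:
    """Build the request URL, URL-encoding the query so names like 'São Paulo' are safe."""
    query = urllib.parse.urlencode({"key": api_key, "q": city_name})
    return f"{BASE_URL}?{query}"


def parse_temp_c(payload: dict) -> float:
    """Extract the Celsius temperature, failing loudly if the shape is unexpected."""
    try:
        return float(payload["current"]["temp_c"])
    except (KeyError, TypeError) as exc:
        raise ValueError(f"unexpected weather payload: {payload!r}") from exc


def get_weather(city_name: str, timeout: float = 10.0) -> float:
    # Hypothetical variable name; keeps the real key out of source control.
    api_key = os.environ.get("WEATHERAPI_KEY")
    if not api_key:
        raise RuntimeError("set the WEATHERAPI_KEY environment variable")
    url = build_weather_url(city_name, api_key)
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(url, timeout=timeout) as response:
        payload = json.load(response)
    return parse_temp_c(payload)
```

Splitting URL construction and parsing out of `get_weather` means both can be unit-tested without network access.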

📈 Where Codex Excels

- Rapid prototyping: Get from idea to a working MVP faster by generating boilerplate and first drafts

- Learning tool: See how different implementations are structured

- Legacy code maintenance: Understand and refactor old codebases quickly

- Documentation: Auto-generate comments and docstrings

⚠️ Limitations and Developer Responsibilities

Codex is powerful, but it makes mistakes. Developers should watch for:

- Incorrect or insecure code: Codex may suggest code that looks plausible but is wrong, follows insecure patterns, or relies on insecure APIs

- License issues: Some suggestions may closely mirror code seen in the training data, which can carry license obligations

- Over-reliance: It’s a tool, not a substitute for real problem solving

It’s crucial to treat Codex as a co-pilot, not a pilot: test, review, and validate all generated code before using it in production.
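As an illustration of the kind of issue review should catch, consider a lookup function of the sort an assistant might plausibly suggest. The first version builds SQL with string formatting, which is open to SQL injection; the second uses a parameterized query. This is a generic sketch using Python's built-in `sqlite3` module and a hypothetical `users` table, not an actual Codex transcript.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Insecure: user input is pasted into the SQL text, so input such as
    # "x' OR '1'='1" changes the meaning of the query.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Safe: the driver sends `name` as a bound parameter, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


def make_demo_db() -> sqlite3.Connection:
    # In-memory database with two rows for demonstration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


if __name__ == "__main__":
    conn = make_demo_db()
    attack = "x' OR '1'='1"
    print(find_user_unsafe(conn, attack))  # every row in the table
    print(find_user_safe(conn, attack))    # []
```

A unit test that feeds hostile input like `attack` to generated functions is a cheap way to catch this class of bug before it reaches production.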

🛠️ Getting Started with Codex

- Sign up for the OpenAI Developer Platform

- Try GitHub Copilot in your IDE

- Experiment with APIs via Postman or curl

- Watch prompt engineering tutorials in the OpenAI DevDay replays
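If you want to see the raw HTTP exchange that Postman or curl would send, the following sketch builds the same kind of request with Python's standard library. It assumes OpenAI's Responses API endpoint (`POST https://api.openai.com/v1/responses`) with `model` and `input` fields, an API key in the `OPENAI_API_KEY` environment variable, and the model name `codex-mini-latest`; check the current API reference for available models and the response format before relying on any of these.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/responses"
# Assumption: a Codex-family model name; check the API docs for current models.
DEFAULT_MODEL = "codex-mini-latest"


def build_request(prompt: str, api_key: str, model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying one text prompt."""
    body = json.dumps({"model": model, "input": prompt}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Concatenate the output_text parts of any message items in the response.

    Assumes the response has an `output` list whose message items hold a
    `content` list of typed parts.
    """
    parts = []
    for item in response_json.get("output", []):
        if item.get("type") != "message":
            continue
        for content in item.get("content", []):
            if content.get("type") == "output_text":
                parts.append(content.get("text", ""))
    return "".join(parts)


def ask(prompt: str, timeout: float = 60.0) -> str:
    # Sends the request; raises KeyError if OPENAI_API_KEY is not set.
    request = build_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_text(json.load(response))
```

With `OPENAI_API_KEY` set, a call such as `ask("Write a Python function that converts Fahrenheit to Celsius.")` should return the model's reply as a plain string. Keeping `build_request` separate makes it easy to inspect exactly what is sent, much as you would in Postman.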