Updated: May 2025

At I/O 2025, Google delivered one of its most ambitious keynotes in recent years, laying out a vision that ties together multimodal AI, immersive hardware, and conversational search. From Gemini AI’s deeper platform integrations to the debut of Android XR and a rethink of how search works, the announcements signal a future where generative and agentic intelligence are the default, not the exception.

🚀 Gemini AI: From Feature to Core Platform

In past years, AI was a feature: a smart reply in Gmail, a better camera mode on Pixel. Gemini AI has now become Google’s core intelligence engine, embedded across Android, Chrome, Search, Workspace, and more. Gemini 2.5, the newest model, powers some of the biggest changes showcased at I/O.

Gemini Live

Gemini Live changes how users interact with mobile devices by supporting two-way voice and camera-based AI interactions. Unlike passive voice assistants, Gemini Live listens, watches, and responds with contextual awareness. You can point your camera at an ingredient and ask, “What’s this ingredient?” It will not only recognize the item but also suggest recipes, estimate its calorie count, and list nearby vendors that stock it.

Developer Tools for Gemini Agents

- Function Calling API: As with OpenAI’s equivalent, developers can now define functions that Gemini calls autonomously.

- Multimodal Prompt SDK: Use images, voice, and video as part of app prompts in Android apps.

- Long-context Input: Gemini now handles 1-million-token context windows, large enough for full document libraries or user histories.

These tools turn Gemini from a chat model into a full-blown digital agent framework. This shift is critical for startups looking to reduce operational load by automating workflows in customer service, logistics, and education via mobile AI.
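
The function-calling pattern can be sketched without any SDK. In the minimal Python example below, the model's reply is simulated as a JSON string. The tool name `get_order_status`, the `{"name": ..., "args": ...}` reply shape, and the `tool` and `dispatch` helpers are all hypothetical and do not reflect the actual Gemini API. The point is only the control flow: the app declares functions, the model picks one, and the app runs it.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry of functions the app exposes to the model.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the model can request it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_order_status(order_id: str) -> dict:
    # Stand-in for a real backend lookup (assumed, for illustration).
    return {"order_id": order_id, "status": "shipped"}


def dispatch(model_reply: str) -> Any:
    """Run the function named in a (simulated) model reply.

    Assumes the reply is JSON shaped like {"name": ..., "args": {...}};
    real APIs define their own schema.
    """
    call = json.loads(model_reply)
    name = call.get("name")
    if name not in TOOLS:
        raise ValueError(f"model requested unknown function: {name!r}")
    return TOOLS[name](**call.get("args", {}))


# Simulated model output; a real model would produce this from a user prompt.
simulated_reply = '{"name": "get_order_status", "args": {"order_id": "A-1001"}}'
result = dispatch(simulated_reply)
print(result)
```

Rejecting unknown function names before calling anything keeps the model from triggering code the app never chose to expose, which matters once these calls run unattended.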

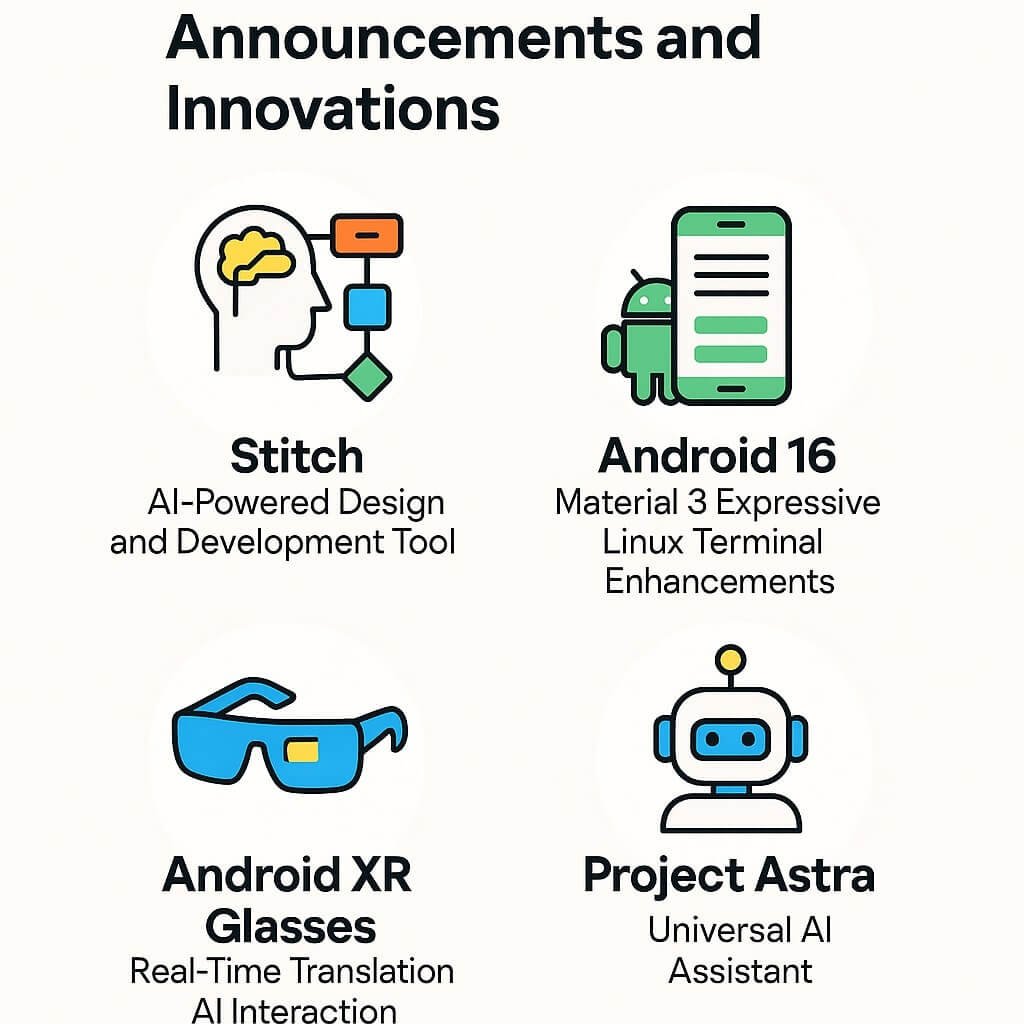

🕶️ Android XR: Google’s Official Leap into Mixed Reality

Google confirmed what the developer community anticipated: Android XR is now an official OS variant tailored for head-worn computing. In collaboration with Samsung and Xreal, Google previewed a new line of XR smart glasses powered by Gemini AI and spatial interaction models.

Core Features of Android XR:

- Contextual UI: User interfaces that float in space and respond to gaze + gesture inputs

- On-device Gemini Vision: Live object recognition, navigation, and transcription

- Developer XR SDK: A new set of Unity/Unreal plugins + native Android libraries optimized for rendering performance

Developers will be able to preview XR UI with the Android Emulator XR Edition, set to release in July 2025. This includes templates for live dashboards, media control layers, and productivity apps like Notes, Calendar, and Maps.

🔍 Search Reinvented: Enter “AI Mode”

AI Mode is Google Search’s biggest UX redesign in a decade. When users enter a query, they’re presented with a multi-turn chat experience that includes:

- Suggested refinements (“Add timeframe”, “Include video sources”, “Summarize forums”)

- Live web answers + citations from reputable sites

- Conversational threading so context is retained between questions
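
To make conversational threading concrete, here is a minimal Python sketch of how a client might carry context between questions. The `Thread` class, its `ask` method, and the `answer_fn` callback are hypothetical names for illustration; nothing here describes how Google Search implements AI Mode.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Thread:
    """Keeps prior turns so each new question is answered in context."""
    answer_fn: Callable[[str], str]  # hypothetical model/search backend
    turns: List[Tuple[str, str]] = field(default_factory=list)

    def build_prompt(self, question: str) -> str:
        # Prepend earlier Q/A pairs so follow-ups can refer back to them.
        history = "".join(f"Q: {q}\nA: {a}\n" for q, a in self.turns)
        return f"{history}Q: {question}\nA:"

    def ask(self, question: str) -> str:
        answer = self.answer_fn(self.build_prompt(question))
        self.turns.append((question, answer))
        return answer


# Stand-in backend that just reports how many questions it was shown.
def fake_backend(prompt: str) -> str:
    return f"saw {prompt.count('Q: ')} question(s)"


thread = Thread(answer_fn=fake_backend)
first = thread.ask("Best hiking trails near Denver?")
second = thread.ask("Which of those are dog-friendly?")
print(first)
print(second)
```

The second call sees both questions, which is what lets a follow-up like “those” resolve against the earlier answer.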

For developers building SEO or knowledge-based services, AI Mode creates both opportunities and challenges. Featured snippets and organic rankings still matter, but AI Mode answers place more weight than ever on data quality, structured content, and machine-readable schemas.

How to Optimize for AI Mode as a Developer:

- Use schema.org markup and FAQs

- Ensure content loads fast on mobile with AMP or responsive design

- Provide structured data sources (CSV, JSON feeds) if applicable
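
As one way to act on the schema.org item, the Python sketch below builds FAQPage markup as JSON-LD. The `FAQPage`, `Question`, and `Answer` types and the `mainEntity` and `acceptedAnswer` properties come from the schema.org vocabulary; the helper name `faq_jsonld` and the sample question are illustrative assumptions, and how much weight AI Mode gives this markup is not stated in the announcements.

```python
import json
from typing import List, Tuple


def faq_jsonld(pairs: List[Tuple[str, str]]) -> str:
    """Return a schema.org FAQPage document as a JSON-LD string."""
    doc = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(doc, indent=2)


# Sample content (illustrative only).
markup = faq_jsonld([("Does the app work offline?", "Yes, after the first sync.")])

# Embed the result in a page inside a JSON-LD script tag.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Generating the markup from the same question and answer pairs that render the visible FAQ keeps the structured data and the on-page text from drifting apart.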

📱 Android 16: Multitasking, Fluid Design, and Linux Dev Tools

While Gemini and XR stole the spotlight, Android 16 brought quality-of-life upgrades developers will love:

Material 3 Expressive

A dynamic evolution of Material You, Expressive brings more animations, stateful UI components, and responsive layout containers. Animations are now interruptible, and transitions are shared across screens natively.

Built-in Linux Terminal

Developers can now open a Linux container on-device and run CLI tools such as vim, gcc, and curl. Great for debugging apps on the fly or managing self-hosted services during field testing.

Enhanced Jetpack Libraries

- androidx.xr.* for spatial UI

- androidx.gesture for air gestures

- androidx.vision for camera/Gemini interop

These libraries show that Google is unifying the development story for phones, tablets, foldables, and glasses under a cohesive UX and API model.

🛠️ Gemini Integration in Developer Tools

Google announced Gemini Extensions for Android Studio Giraffe, allowing AI-driven assistance directly in your IDE:

- Code suggestion using context from your current file, class, and Gradle setup

- Live refactoring and test stub generation

- UI preview from prompts: “Create onboarding card with title and CTA”

While these feel similar to GitHub Copilot, Gemini Extensions focus heavily on Android-specific boilerplate reduction and system-aware coding.

🎯 Implications for Startups, Enterprises, and Devs

For Startup Founders:

Agentic AI via Gemini will reduce the headcount needed to ship an MVP. With AI summarization, voice transcription, and simple REST code generation, even solo founders can build prototypes with advanced UX features.

For Enterprises:

Gemini’s Workspace integrations allow LLM-powered data queries across Drive, Sheets, and Gmail while respecting existing security permissions. Expect Gemini Agents to replace macros, approval workflows, and basic dashboards.

For Indie Developers:

Android XR creates a brand-new platform that’s open from Day 1. It may be your next moonshot if you missed the mobile wave in 2008 or the App Store gold rush. Apps like live captioning, hands-free recipes, and context-aware journaling are ripe for innovation.