Unity developers are entering a period where generative systems stop being demos and start becoming daily tools. This week’s research and community updates show tangible paths to (1) running conversational, personality-consistent NPCs within Unity; (2) using reasoning-guided generation for levels and systems; and (3) bootstrapping projects from natural language into runnable Unity prototypes. Unity also issued a high-severity security patch this week, so modern AI features must ship with modern security habits.

Unity NPCs: From Dialog Trees to Consistent Personalities

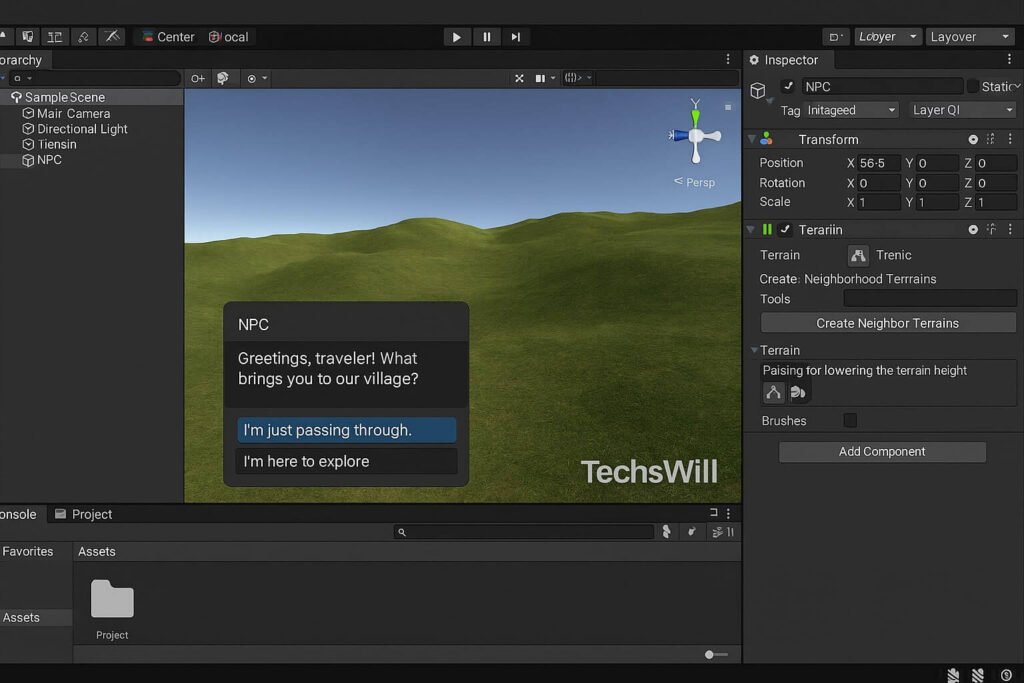

Developers have shared fresh approaches for long-term memory, personality consistency, and multi-character conversations that run locally. The goal is to avoid brittle tree logic and deliver characters that feel coherent across long sessions. A community showcase this week highlights a local, open approach that keeps NPCs “in character,” remembers past choices, and evolves relationships mathematically (for example, as scores that shift with each interaction), all without breaking immersion.

Why it matters: On-device inference reduces latency, lowers costs, and improves reliability for dialogue-heavy games. It also aligns with privacy-first design, since sensitive play data can remain on player devices.

Reasoning-Driven Procedural Worlds

Traditional procedural content relies on fixed rules and seeded randomness. This week’s examples and research point toward reasoning-guided generation, where agents place and connect content with an understanding of gameplay intent. The result is less “random noise” and more purposeful worlds: layouts that react to player state, pacing, and goals while remaining reproducible via seeds and guardrails.

Design notes

- Blend classic procedural (noise, BSP, wave-function collapse) with LLM agents for context rather than raw content.

- Keep authorship: designers specify constraints, tone, difficulty curves, and forbidden states.

- Instrument everything: log seeds, prompts, and outcomes to compare runs and tune coherence.
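
To make the "instrument everything" note concrete, here is a minimal sketch of a per-run log record. It is written in Python for brevity and would need porting to C# inside Unity. The names `RunRecord`, `generate_layout`, `record_run`, and `to_log_line` are hypothetical, and the generator is a stand-in that only demonstrates seed-driven reproducibility.

```python
import hashlib
import json
import random
from dataclasses import asdict, dataclass


@dataclass
class RunRecord:
    """One generation run: enough to replay it and compare it with others."""
    seed: int
    prompt_sha256: str  # a hash keeps log lines short; store raw prompts elsewhere
    room_count: int
    dead_ends: int


def generate_layout(seed: int) -> dict:
    """Stand-in generator (hypothetical): the same seed gives the same layout."""
    rng = random.Random(seed)  # local RNG, so global random state is untouched
    rooms = rng.randint(5, 12)
    return {"room_count": rooms, "dead_ends": rng.randint(0, rooms // 2)}


def record_run(seed: int, prompt: str) -> RunRecord:
    """Generate a layout and capture the seed, prompt hash, and outcome."""
    layout = generate_layout(seed)
    digest = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
    return RunRecord(seed, digest, layout["room_count"], layout["dead_ends"])


def to_log_line(record: RunRecord) -> str:
    """Serialize as one JSON object per line so runs are easy to diff."""
    return json.dumps(asdict(record), sort_keys=True)
```

Two runs with the same seed and prompt produce identical log lines, which makes any difference between builds show up as a plain text diff.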

Text-to-Prototype: Auto-Generating Unity Projects from Natural Language

New research released within the past week demonstrates an end-to-end pipeline that turns natural language requirements into executable 3D Unity projects. A multi-agent system parses intent, generates C# systems, constructs scenes, and iterates with an automated test-time loop until the project compiles and runs. Although this is still research, the approach offers a practical blueprint for production: use agents to stub systems, wire scenes, and accelerate greyboxing, then let humans refine mechanics, polish UX, and optimize performance.

Pragmatic workflow for studios

- Start with a tight, structured “spec prompt” (core loop, verbs, victory/defeat, camera, input).

- Generate a scaffold only: scene hierarchy, input maps, component stubs, and placeholder content.

- Gate every step with CI: compile checks, basic playmode tests, and lint rules to keep diffs clean.

- Transition to human-led tuning early: feel, readability, and theme still need designers.
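
One way to keep the "spec prompt" tight is to treat it as structured data and refuse to generate anything until every field is filled in. The sketch below assumes a flat dictionary with the six fields named in the first bullet; the field names and the functions `missing_fields` and `render_spec_prompt` are illustrative, not part of any published pipeline.

```python
from typing import Dict, List

# Assumed field names, taken from the bullet above (core loop, verbs, ...).
REQUIRED_FIELDS = ("core_loop", "verbs", "victory", "defeat", "camera", "input")


def missing_fields(spec: Dict[str, object]) -> List[str]:
    """Return required fields that are absent or empty, in a stable order."""
    return [name for name in REQUIRED_FIELDS if not spec.get(name)]


def render_spec_prompt(spec: Dict[str, object]) -> str:
    """Render a complete spec as 'field: value' lines; reject partial specs."""
    missing = missing_fields(spec)
    if missing:
        raise ValueError("spec is missing: " + ", ".join(missing))
    lines = []
    for name in REQUIRED_FIELDS:
        value = spec[name]
        if isinstance(value, (list, tuple)):
            value = ", ".join(str(item) for item in value)
        lines.append(f"{name}: {value}")
    return "\n".join(lines)
```

Failing early on an incomplete spec is cheaper than discovering a missing defeat condition after a scaffold has already been generated.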

Performance: On-Device Inference Without Melting Budgets

AI-assisted systems can be CPU/GPU-hungry. To keep frame times predictable:

- Update cadence: Tick AI reasoning on a budget (e.g., every N frames) and interleave agents.

- Work schedulers: Move heavy operations off the main thread onto background threads; prefer the C# Job System with the Burst compiler where possible.

- Memory hygiene: Use pooled buffers and stream model weights; unload between scenes to prevent spikes.

- Fallbacks: Provide rule-based fallbacks when models aren’t available or budgets are tight.
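
The update-cadence and fallback ideas can be sketched in a few lines. This is an engine-agnostic illustration in Python, not Unity code: `BudgetedScheduler` and `choose_reply` are hypothetical names, and in a real project `on_frame` would be driven from an `Update` loop or a job.

```python
from collections import deque
from typing import Callable, Deque, List, Optional, Tuple


class BudgetedScheduler:
    """Runs at most `agents_per_tick` agents every `interval` frames, round-robin."""

    def __init__(self, interval: int, agents_per_tick: int) -> None:
        if interval < 1 or agents_per_tick < 1:
            raise ValueError("interval and agents_per_tick must be >= 1")
        self.interval = interval
        self.agents_per_tick = agents_per_tick
        self._queue: Deque[Tuple[str, Callable[[], None]]] = deque()
        self._frame = 0

    def add(self, name: str, think: Callable[[], None]) -> None:
        self._queue.append((name, think))

    def on_frame(self) -> List[str]:
        """Call once per frame; returns the names of the agents that ran."""
        self._frame += 1
        if self._frame % self.interval != 0 or not self._queue:
            return []
        ran = []
        for _ in range(min(self.agents_per_tick, len(self._queue))):
            name, think = self._queue.popleft()
            think()
            self._queue.append((name, think))  # back of the line: interleaving
            ran.append(name)
        return ran


def choose_reply(model: Optional[Callable[[str], str]], line: str, canned: str) -> str:
    """Use the model when one is loaded; otherwise fall back to a canned line."""
    if model is None:
        return canned
    try:
        return model(line)
    except Exception:
        return canned  # a failing model should degrade, not crash the scene
```

With `interval=2` and `agents_per_tick=1`, two NPCs each think once every four frames, so the per-frame cost stays flat as the cast grows.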

Testing: From Determinism to “Within-Bounds” AI

Procedural and generative systems need new QA patterns:

- Seeded runs: Recreate worlds and dialogues deterministically by logging seeds and prompts.

- Scenario oracles: Define acceptable ranges (e.g., path lengths, encounter density, economy balance) and flag outliers.

- Behavior snapshots: Capture NPC memory states and compare deltas across builds.
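
A scenario oracle and a snapshot comparison can both be very small. The sketch below assumes metrics and NPC memory are available as flat dictionaries; the metric names, bounds, and the functions `find_outliers` and `snapshot_delta` are made up for illustration.

```python
from typing import Dict, List, Tuple

Bounds = Dict[str, Tuple[float, float]]


def find_outliers(metrics: Dict[str, float], bounds: Bounds) -> List[str]:
    """Names of metrics that are missing or outside [low, high], inclusive."""
    bad = []
    for name, (low, high) in sorted(bounds.items()):
        value = metrics.get(name)
        if value is None or not (low <= value <= high):
            bad.append(name)
    return bad


def snapshot_delta(old: Dict[str, object], new: Dict[str, object]) -> Dict[str, tuple]:
    """Keys whose values differ between two snapshots, as key -> (old, new)."""
    keys = sorted(set(old) | set(new))
    return {k: (old.get(k), new.get(k)) for k in keys if old.get(k) != new.get(k)}
```

A test then asserts that `find_outliers` returns an empty list for each seeded run, and that `snapshot_delta` between builds contains only the changes the team expected.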

Security: Ship AI Faster—And Safer—After This Week’s Patch

This week, Unity disclosed and patched a high-severity engine vulnerability affecting versions back to 2017.1. Teams should immediately upgrade via Unity Hub or the Download Archive and apply vendor guidance for shipped builds. If you maintain live games, plan a hotfix path and validate your asset-loading surfaces. Treat this as an opportunity to harden your AI pipelines—especially any that evaluate or load external content at runtime.

Hardening checklist

- Upgrade to the patched Unity versions and rebuild client/server artifacts.

- Review file loading, mod/plugin paths, and any dynamic content ingestion.

- Sandbox AI I/O: strict schema validation for prompts, outputs, and save data.

- Re-sign builds, re-verify platform store requirements, and run AV/anti-tamper scans.
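
For the "sandbox AI I/O" item, strict validation means rejecting anything that does not match the expected shape exactly, rather than picking out the fields you recognize. The sketch below assumes model replies arrive as JSON; the schema, the length limit, and `parse_reply` are hypothetical choices for illustration.

```python
import json
from typing import Any, Dict

# Hypothetical schema for one NPC reply: field name -> required Python type.
REPLY_SCHEMA: Dict[str, type] = {"speaker": str, "text": str, "mood_delta": int}
MAX_TEXT_CHARS = 500  # assumed limit; tune per game


def parse_reply(raw: str) -> Dict[str, Any]:
    """Parse model output; raise ValueError unless it matches the schema exactly."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("top-level value must be an object")
    if set(data) != set(REPLY_SCHEMA):
        raise ValueError("unexpected or missing fields")
    for name, expected in REPLY_SCHEMA.items():
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(data[name], bool) or not isinstance(data[name], expected):
            raise ValueError(f"{name} must be {expected.__name__}")
    if len(data["text"]) > MAX_TEXT_CHARS:
        raise ValueError("text too long")
    return data
```

The same deny-by-default shape applies to save data and prompts: unknown keys are an error, not something to ignore.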

Hands-On: Unity Implementation Patterns

Local NPC Dialogue with Personality

- Model wrapper: abstract providers (local vs cloud) behind a common interface.

- Personas as data: store traits, goals, and boundaries in ScriptableObjects.

- Context windows: compress history with summaries; pin canonical facts to avoid drift.

- Designer controls: expose “levers” (temperature, topic rails, tone) in custom inspectors.
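
The first three bullets fit together roughly as follows. This is a Python sketch of the shape only: in Unity the persona would live in a ScriptableObject and the provider would wrap a real local or cloud model. All names here (`DialogueProvider`, `Persona`, `Conversation`, `EchoProvider`, `reply`) are hypothetical, and the "summary" is a crude placeholder for real summarization.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


class DialogueProvider(Protocol):
    """Common interface so local and cloud models are interchangeable."""
    def complete(self, prompt: str) -> str: ...


@dataclass(frozen=True)
class Persona:
    """Persona as data: traits and boundaries are authored, not hard-coded."""
    name: str
    traits: List[str]
    boundaries: List[str]
    temperature: float = 0.7  # designer lever; unused by the stand-in provider


@dataclass
class Conversation:
    persona: Persona
    pinned_facts: List[str] = field(default_factory=list)  # never compressed away
    summary: str = ""
    recent: List[str] = field(default_factory=list)
    max_recent: int = 4

    def add_turn(self, line: str) -> None:
        self.recent.append(line)
        while len(self.recent) > self.max_recent:
            oldest = self.recent.pop(0)
            # Placeholder compression: append and keep the last 300 characters.
            # A real system might ask the model to summarize instead.
            self.summary = (self.summary + " " + oldest).strip()[-300:]

    def build_prompt(self, player_line: str) -> str:
        parts = [
            f"You are {self.persona.name}. Traits: {', '.join(self.persona.traits)}.",
            f"Never: {'; '.join(self.persona.boundaries)}.",
            "Facts: " + " | ".join(self.pinned_facts),
            "Earlier: " + self.summary,
            *self.recent,
            f"Player: {player_line}",
        ]
        return "\n".join(parts)


class EchoProvider:
    """Offline stand-in for a model; reports how much context it received."""
    def complete(self, prompt: str) -> str:
        return f"(reply to {len(prompt.splitlines())} lines of context)"


def reply(provider: DialogueProvider, convo: Conversation, player_line: str) -> str:
    """Build the prompt, query the provider, and record both turns."""
    answer = provider.complete(convo.build_prompt(player_line))
    convo.add_turn(f"Player: {player_line}")
    convo.add_turn(f"{convo.persona.name}: {answer}")
    return answer
```

Because pinned facts sit outside the rolling window, they survive however long the session runs, which is the point of pinning canonical facts to avoid drift.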

Reasoned Procedural Layouts

- Two-phase build: fast classical generation → AI pass to label, connect, and pace content.

- Constraint graphs: prevent unreachable states; ensure quest hooks have valid anchors.

- Debug overlays: visualize nav coverage, spawn heatmaps, and narrative beats.
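
A minimal sketch of the two-phase idea with a reachability constraint, in Python. Phase 1 is a simple seeded graph generator standing in for noise, BSP, or wave-function collapse. Phase 2 is a depth-based rule standing in for the AI labeling pass, since the point here is the constraint check around it. All function names and labels are hypothetical.

```python
import random
from collections import deque
from typing import Dict, List

Graph = Dict[int, List[int]]


def generate_rooms(seed: int, count: int) -> Graph:
    """Phase 1 (classical): a seeded random tree plus a few extra corridors."""
    rng = random.Random(seed)
    graph: Graph = {i: [] for i in range(count)}

    def connect(a: int, b: int) -> None:
        if a != b and b not in graph[a]:
            graph[a].append(b)
            graph[b].append(a)

    for room in range(1, count):
        connect(room, rng.randrange(room))  # link to an earlier room: stays connected
    for _ in range(count // 3):
        connect(rng.randrange(count), rng.randrange(count))
    return graph


def depths_from(graph: Graph, start: int) -> Dict[int, int]:
    """Breadth-first search: number of steps from start to each reachable room."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        room = queue.popleft()
        for nxt in graph[room]:
            if nxt not in depth:
                depth[nxt] = depth[room] + 1
                queue.append(nxt)
    return depth


def label_rooms(graph: Graph, start: int = 0) -> Dict[int, str]:
    """Phase 2 stand-in: refuse unreachable rooms, then label by depth."""
    depth = depths_from(graph, start)
    unreachable = set(graph) - set(depth)
    if unreachable:
        raise ValueError(f"unreachable rooms: {sorted(unreachable)}")
    deepest = max(depth.values())
    labels = {}
    for room, d in depth.items():
        if d == 0:
            labels[room] = "start"
        elif d == deepest:
            labels[room] = "boss"
        else:
            labels[room] = "encounter"
    return labels
```

Whatever replaces the depth rule, running the reachability check before accepting its labels keeps the agent from anchoring a quest hook in a room the player cannot reach.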

Text-to-Prototype Scaffolding

- Prompt → YAML spec → codegen: keep a human-readable intermediate to diff and review.

- Guardrails: deny unsafe APIs by default; require explicit allowlists in the generator.

- CI gates: compile, minimal playmode test, and vetting of generated assets/paths.
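
As one example of a deny-by-default guardrail, a CI step can scan generated C# files for `using` directives that are not on an explicit allowlist. The allowlist below is a hypothetical starting point, and the check is deliberately shallow: it does not catch fully qualified calls such as `System.IO.File.Delete(...)`, so treat it as one gate among several rather than a sandbox.

```python
import re
from typing import FrozenSet, List

# Hypothetical allowlist; any namespace not listed is denied by default.
ALLOWED_NAMESPACES: FrozenSet[str] = frozenset(
    {"System", "System.Collections.Generic", "UnityEngine"}
)

# Matches `using X;`, `using static X;`, and alias forms like `using A = X;`.
_USING = re.compile(
    r"^\s*using\s+(?:static\s+)?(?:[A-Za-z_]\w*\s*=\s*)?([A-Za-z_][\w.]*)\s*;",
    re.MULTILINE,
)


def disallowed_usings(
    csharp_source: str, allowed: FrozenSet[str] = ALLOWED_NAMESPACES
) -> List[str]:
    """Return the sorted namespaces imported by the source but not allowlisted."""
    found = _USING.findall(csharp_source)
    return sorted({ns for ns in found if ns not in allowed})
```

A generator run fails the gate whenever `disallowed_usings` returns a non-empty list, and widening the allowlist becomes a reviewed change instead of a silent default.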

What to Build This Month

- A dialogue-driven social sim prototype using local inference and personality rails.

- An action-roguelite greybox where an agent labels rooms and connects encounters by difficulty.

- A vertical slice auto-scaffold: input, camera, interaction, and save/load stubs generated from a one-page spec.

Each project is small enough to finish, but rich enough to pressure-test memory, performance budgets, and testing strategies.